Available online at www.sciencedirect.com

journal homepage: www.elsevier.com/locate/CLSR

The information infrastructures of 1985 and 2018: The sociotechnical context of computer law & security

Roger Clarke a,b,c,∗, Marcus Wigan d

a Xamax Consultancy Pty Ltd, Canberra, Australia
b University of NSW Law, Sydney, Australia
c Research School of Computer Science, Australian National University, Canberra, Australia
d Principal of Oxford Systematics, Melbourne, Victoria, Australia

Article info

Keywords: Infrastructural resilience; Consumer rights; Human rights; Accountability; Income distribution; Societal and political resilience

Abstract

This article identifies key features of the sociotechnical contexts of computer law and security at the times of this journal’s establishment in 1985, and of its 200th Issue in 2018. The infrastructural elements of devices, communications, data and actuator technologies are considered first. Social actors as individuals, and in groups, communities, societies and polities, together with organisations and economies, are then interleaved with those technical elements. This provides a basis for appreciation of the very different challenges that confront us now in comparison with the early years of post-industrialism.

0267-3649/© 2018 Xamax Consultancy Pty Ltd and Marcus Wigan. Published by Elsevier Ltd. All rights reserved.

1. Introduction

The field addressed by Computer Law & Security Review (CLSR) during its first 34 volumes, 1985–2018, has developed within an evolving sociotechnical context. A multi-linear trace of that context over a 35-year period would, however, be far too large a topic to address in a journal article. This article instead compares and contrasts the circumstances that applied at the beginning and at the end of the period, without any systematic attempt either to track the evolution from prior to current state or to identify each of the disruptive shifts that have occurred. This sacrifices developmental insights, but it enables key aspects of contemporary challenges to be identified in a concise manner.

The article commences by identifying what the authors mean by ‘sociotechnical context’. The two main sections then address the circumstances of 1985 and 2018. In each case, consideration is first given to the information infrastructure whose features and affordances are central to the field of view, and then to the activities of the people and organisations that use and are used by information technologies. Implications of the present context are then drawn for CLSR, its contributors and its readers, but more critically for society.

∗Corresponding author at: Xamax Consultancy Pty Ltd, 78 Sidaway St, Chapman ACT 2611 Canberra, Australia. E-mail address: Roger.Clarke@xamax.com.au (R. Clarke).

The text can of course be read in linear fashion. Alternatively, readers interested specifically in assessment of contemporary IT can skip the review of the state in 1985 and go directly to the section dealing with 2018. It is also feasible to read about the implications in Section 5 first, and then return to earlier sections in order to identify the elements of the sociotechnical context that have led the authors to those inferences.

https://doi.org/10.1016/j.clsr.2018.05.006

The focus of this article is not on specific issues or incremental changes, because these are identified and addressed on a continuing basis by CLSR’s authors. The concern here is with common factors and particularly with discontinuities – the sweeping changes that are very easily overlooked, and that only emerge when time is taken to step back and consider the broader picture. The emphasis is primarily on social impacts and public policy issues, because the interests of business and government organisations are already strongly represented in this journal and elsewhere.

2. The sociotechnical context

The term ‘socio-technical system’ was coined by Trist and Emery at the Tavistock Institute in the 1950s, to describe systems that involve complex interactions firstly among people and technology, and secondly between society’s complex infrastructures and human behaviour (Emery, 1959). The notion’s applicability extends from primary work systems and whole-organisation systems to macro-social systems at the levels of communities, sectors and societies (Trist, 1981). Studies that reflect the sociotechnical perspective take into account the tension and interplay between the objectives of organisations on the one hand, and humanistic values on the other (Land, 2000).

The review presented in this article is framed within a sociotechnical setting. The necessarily linear representation of a complex system may create the appearance that some factors are being prioritised and some influences accentuated in preference to others, and even that causal relationships exist among them. Except where expressly stated, however, that is not the intention.

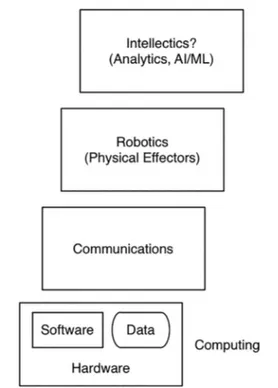

Technological factors are considered first. Information infrastructure is discussed in sub-sections addressing each of the following four segments:

1. Devices, including both hardware and the software running in processors embedded in them.

2. Communication technologies, both locally and over distance.

3. Data, variously as raw material, work-in-process and manufactured product.

4. Means whereby action is taken in the real world. Rather than ‘robotics’, which carries many overtones, the expression ‘actuator technologies’ is preferred.

The focus then turns to what are referred to here as ‘psycho-socio-economic factors’. For the 1985 context, the discussion commences at the level of the individual human, then moves via small groups to communities and societies, and finally shifts to economies and polities. The circumstances of 2018, on the other hand, are more usefully discussed by considering those segments in a different sequence. In order to keep the reference list manageable, citations have been provided primarily in relation to the more obscure and the possibly contentious propositions.

3. 1985

CLSR was established before the end of the Cold War, as the world’s population approached 5 billion. (By 2018, it had leapt by 50%.) Ronald Reagan began his second term as US President, Mikhail Gorbachev became the leader of the Soviet Union, Maggie Thatcher was half-way through her Premiership of the UK, Helmut Kohl was early in his 16-year term as Chancellor of Germany, and Nelson Mandela’s release was still 5 years away. The European Union did not yet exist, and its predecessor, the European Economic Community, comprised only 10 of the 28 EU members of 2018. Of more direct relevance to the topic of the article, the Nintendo Entertainment System was released in North America, the TCP/IP-based Internet was two years old, and adoption of the Domain Name System had only just commenced.

In 1985, academic books existed in the area of ‘computers and law’, notably Bing and Selmer (1980) and Tapper (1982), and a range of articles had appeared in law journals. Although the first specialist journal commenced in 1969 (Rutgers Computer and Technology Law Journal), very few existed at the time of CLSR’s launch, and indeed ‘computers and law’ special issues in established journals were still uncommon.

3.1. Information infrastructure in 1985

An indication of the technology of the time is provided by an early-1985 special issue in the Western New England Law Review (7, 3), which included articles on legal protections for software, software contracts, computer performance claims, the law relating to bulletin board activities, computer crime, financial and tax accounting for software, and privacy. The editorial referred also to the regulation of telecommunications and of the electromagnetic spectrum, regulation of banking records and of automated teller machines (ATMs), regulation of computer-related staff activities, the assessment of value of computer-readable data, software licensing versus software sale, and taxation of services rather than goods.

The broader concepts of informatics, information technology (IT) and information infrastructure had yet to displace computing as the technical focus. Communications of the ACM in 1984–86 (Vols. 27–29) identified the topics of computer abuse, computer crime, privacy, the legal protection of computer software, software ‘piracy’, computer matching, trustworthiness of software, cryptography, data quality and due process, hacking and access control. IEEE Computer (Vols. 17–19) contained very little of relevance, but did add insurance against computer disasters, and offered this timeless encapsulation of technological optimism: “The technology for implementing semi-intelligent sub-systems will be in place by the end of this century” (Lundstrom & Larsen, 1985). Computers & Security (still very early in its life, at Vols. 3–5) added security of personal workstations, of floppy diskettes and of small business computers, data protection, information integrity, industrial espionage, password methods, EDP (electronic data processing) auditing, emanation eavesdropping, and societal vulnerability to computer system failures.

The intended early focus of CLSR can be inferred from a few quotations from Volume 1. “‘Hacking’ … has now spread … on an increasing and worrying scale … many of the computers on which important information is filed are the large mainframes of the 1970s technological era” (Saxby, 1985a, p.5). “Many companies [that transmit data to mainframes via telephone lines] do so on the basis of dedicated leased lines to which there is no external access” (Davies, 1985, p.4). “Most people will have heard of the Data Protection Act [1984] by now. However, you may not have begun to think about what it will require you to do. In order to avoid a last minute panic now is the time to start planning for its implementation” (Howe, 1985, p.11). “Where does the law stand in the face of [information being now set free from the medium in which it is stored]? The answer is in a somewhat confused state” (Saxby, 1985b, p.i).

In Vol. 1, Issue 4, it was noted that “Specific areas to be addressed will include communications, the growth in ‘value added’ services and the competition to British Telecom”. During the decade following the journal’s establishment, the long-standing State-run Postal, Telegraph and Telephone service agencies (PTTs) were in the process of being variously dismembered, subjected to competition and privatised. The editorial continued “Also to be covered [are] the development of optical disk technology, its implications for magnetic media, and the development of international networking standards” (p.30). In 1, 5: “Licences [for a UK cellular radio service] were granted to two consortia … The licences stipulated a start date of January 1984 and required 90% of the population to be covered by cellular radio by the end of the decade. … By November 1985 both licensees were claiming faster than expected market penetration” (p.18).

The first-named author conducted a previous study of electronic interaction research during the period 1988–2012 (Clarke and Pucihar, 2013). In support of that work, an assessment was undertaken of the state of play of relevant infrastructure in 1987 (Clarke, 2012c). Applying and extending that research, the features of information infrastructure are identified under four headings.

3.1.1. Devices

Corporate devices were a mix of mainframes, in particular the mature era of the IBM 370 series, and mature mini-computers such as DEC VAX. Micro-computers were just beginning to eat into the mini-computer’s areas of dominance, with SUN Workstations prominent among them. Corporate computers were accessed by means of ‘dumb terminals’, which were mostly light-grey-on-dark-grey cathode ray tubes, with some green or orange on black. Connections between micro-computers and larger devices became practicable during the 1980s, and colour screens emerged from the end of the 1980s; but flat screens only replaced bulky cathode-ray tubes (CRTs) from about 2000.

Consumer devices were primarily desktop ‘personal computers’. These had seen a succession of developments in 1974, 1977 and 1980–1981, followed by the Apple Mac, which during the year prior to CLSR’s launch had provided consumer access to graphical user interfaces (GUIs). Data storage comprised primarily floppy disks with a capacity of 800 KB–1.44 MB. Hard disks, initially also with very limited capacity, gradually became economically feasible during the following 5–10 years. A few ‘luggable’ PCs existed. During the following decade, progress in component miniaturisation and battery technologies enabled their maturation into ‘portables’ or ‘laptops’.

Handheld devices were only just emergent, in the form of Personal Digital Assistants (PDAs), but their functionality and their level of adoption both remained limited for a further two decades until first the iPhone in 2007, with its small screen but computer-like functions, and then the breakthrough larger-screen tablet, the iPad in 2010.

Systems software was still at the level of the MVS/CICS operating system (OS) on mainframes, VMS on DEC’s VAX series mini-computers, Unix on increasingly capable micro-computers such as SUN Workstations, and PC DOS and Mac OS v.2 on PCs. Linux was not released until 1991. Wintel machines did not have a workable GUI-based OS until the mid-1990s.

Software development tools had begun with machine language and then assembler languages, whose structure was dictated by the machine’s internal architecture. By 1985, the dominant approach involved algorithmic/procedural languages, which enable the expression of logical solutions to problems. So-called fourth generation languages (4GLs), whose structure reflects the logic of the problems themselves, had recently complemented these. The 5th generation was emergent in the form of expert systems, in which the logic is most commonly expressed in the form of rules (Hayes-Roth et al., 1983).
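To make the contrast with procedural code concrete, the following is a minimal sketch of the rule-based style of expression associated with expert systems: knowledge is written as if-then rules, and a generic engine repeatedly applies them to the known facts (forward chaining). It does not reproduce any particular product of the period. The function name `forward_chain`, the rules and the facts are all hypothetical; the rules are loosely themed on data-protection registration but are not a statement of what the Data Protection Act 1984 required.

```python
# Minimal forward-chaining rule engine (hypothetical illustration).
# Each rule pairs a set of conditions with a single conclusion; the engine
# keeps firing rules whose conditions are all known until nothing new is derived.

Rule = tuple[frozenset[str], str]


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Return the initial facts plus every conclusion derivable from the rules."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # Fire a rule only if all its conditions hold and it adds something new.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical rules: they illustrate the technique, not the law.
RULES: list[Rule] = [
    (frozenset({"holds_personal_data", "processes_automatically"}), "is_data_user"),
    (frozenset({"is_data_user"}), "should_register"),
]

if __name__ == "__main__":
    print(sorted(forward_chain({"holds_personal_data", "processes_automatically"}, RULES)))
```

The rules are declared as data, separate from the engine that applies them. That separation of knowledge from control is what distinguished the approach from procedural code, in which the same logic would be written as nested conditionals.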

A significant proportion of software development was originally conducted within academic and other research contexts. The approach tended to be collaborative in nature, with source-code often readily accessible and exploitable, as epitomised by Donald Knuth’s approach with the TeX typesetting system c. 1982. The free software movement built on this legacy. Meanwhile, particularly from the time when the then-dominant mainframe supplier, IBM, ‘unbundled’ its software in 1969, corporations perceived the opportunity to make substantially greater profits by claiming and exercising rights over software. The free software and proprietary software approaches have since run in parallel. A variant of free software referred to as ‘open source’ software became well known in business circles from c. 1998.

Application software had reached varying levels of sophistication. At enterprise level, airline reservation systems, ATM networks and high-value international funds transfer (SWIFT) were well established, and EFT/POS systems were emergent. However, financial management systems (FMIS) were still almost entirely intra-organisational. Enterprise resource planning (ERP) business management suites, and most forms of supply chain system, only emerged during the following decade. Free-text search capabilities existed, but only as the ICL Status and IBM STAIRS products, used for large-scale text libraries, and available to few people. The majority of application software continued to be constructed in-house, although pre-written software packages had by then achieved significant market-share, and became prevalent for mainstream financial, administrative and business management applications during the following two decades.

For personal computers, independent word processing and spreadsheet packages existed, but integrated office suites emerged only 5–10 years later, c. 1990–1995. Many market segments existed. For example, significant impetus to software development techniques had been provided by games from 1977 to 1985, and this has continued well into the present century. In the civil engineering field, an indication of the volume and sophistication of contemporary microcomputer applications is provided by Wigan (1986). Areas of strong growth in PC applications during the years immediately after CLSR’s launch included desktop publishing and the breakthrough implementation of hypertext in the form of Apple’s HyperCard (Nelson, 1965; Nelson, 1980; Nielsen, 1990). The arrival of database management systems (DBMS) for PCs stimulated the development of more data-intensive PC-based applications. These began to be over-run by (application) software as a service (SaaS) from c. 2005. Text searching on PCs, and then on the Internet, did not start becoming available until a decade after CLSR’s launch. Although the concept of self-replicating code was emergent from the early 1970s, and the notion of a ‘worm’ originated in Brunner (1975), the terms ‘trojan’ and ‘computer virus’ were only just entering usage at the time that CLSR launched, and the first widespread malware infestation, by the Jerusalem virus, occurred 2 years afterwards.

3.1.2. Communications

At enterprise level, local data communications – i.e. within a building, up to the level of a single corporate campus such as a factory, power plant, hospital or university – used various proprietary local area network (LAN) technologies, increasingly 10 Mbps Ethernet segments, and bridges between them. Ethernet supporting 100 Mbps became available only in the mid-1990s, and 1 Gbps from about 2000. Telecommunications (i.e. over distance) had been available in the form of PTT-run telegraph services from the 1840s and in-house in the form of telex (‘teleprinter exchange’) from the 1930s. Much higher-capacity connections were available, but they required an expensive ‘physical private network’ or the services of a Value-Added Network (VAN) provider. The iron grip that PTTs held over telecommunications services was only gradually released, commencing around the time of CLSR’s launch and continuing through the next decade. High-capacity fibre-optic cable was deployed in national backbones commencing in the mid-1980s, but substantial improvements in infrastructure availability and costs took a further decade, by which time ADSL was being implemented over the notional ‘last mile’ to users’ premises using existing twisted-pair copper cables.

The Internet dates to the launch of the inter-networking protocol pair TCP/IP in 1983, but in 1985 its availability was largely limited to scientific and academic communities, primarily within the US. The Domain Name System was launched 3 months before CLSR’s first issue. However, the .uk domain, even though it was one of the first delegated, was not created until a few weeks after CLSR’s first issue. The Internet only gradually became more widely available, commencing 8 years later in 1993, and the innovation that it unleashed began in the second half of the 1990s (Clarke, 1994c, 2004a).

The prospect existed of local electronic communications for small-business and personal use. Direct PC-to-PC connection was possible, and the 230 Kbps Appletalk LAN was available, but for Macintoshes only. Ethernet became economically feasible for small business and consumers within a few years of CLSR’s launch, using 10BaseT twisted-pair cabling, but adoption was initially slow. Wireless local area networks (wifi) did not become available until halfway through CLSR’s first 35 years, from the early 2000s.

Telecommunications services accessible by individuals had begun with the telegraph in the 1840s for text, and the telephone in the 1870s for voice. In the years preceding CLSR’s launch, videotex became available, including in the UK as Prestel from 1979, and most successfully in France as Minitel, which operated from 1982 to 2012 (Kramer, 1993; Dauncey, 1997). In 1985, practical telecommunications support for small business and personal needs comprised 2400 bps modems transmitting digital signals over infrastructure designed for analogue signals, and bulletin board systems (BBS). Given the very low capacity and unreliability, it was more common to transfer data by means of floppy disks, c. 1 MB at a time. It took a further decade, until the mid-1990s, for modem speeds to reach 56 Kbps – provided that good quality copper telephone cabling was in place. Early broadband services – in many countries, primarily ADSL – became economic for small business and consumers only during the late 1990s, c. 15 years after CLSR’s establishment.
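The preference for floppy disks follows from simple arithmetic. The sketch below is a back-of-envelope calculation rather than a model of any particular modem: it estimates how long c. 1 MB would take at the two modem speeds mentioned. It assumes 10 line bits per data byte (8 data bits plus a start and a stop bit), no compression and no retransmissions; the helper name `transfer_seconds` is hypothetical.

```python
# Back-of-envelope transfer times for one floppy disk's worth of data.
# Assumptions: 8-N-1 asynchronous framing (10 line bits per data byte),
# no compression, no error-correction retransmissions, 1 MB = 1,048,576 bytes.

BITS_PER_BYTE_ON_LINE = 10  # 8 data bits + 1 start bit + 1 stop bit
FLOPPY_BYTES = 1_048_576    # c. 1 MB, as in the text


def transfer_seconds(num_bytes: int, line_bps: int) -> float:
    """Idealised time to push num_bytes through a serial link of line_bps bits/s."""
    return num_bytes * BITS_PER_BYTE_ON_LINE / line_bps


for label, bps in [("2400 bps modem (1985)", 2_400), ("56 Kbps modem (mid-1990s)", 56_000)]:
    minutes = transfer_seconds(FLOPPY_BYTES, bps) / 60
    print(f"{label}: about {minutes:.0f} minutes per 1 MB")
```

On these assumptions, c. 1 MB takes a little over an hour at 2400 bps and roughly three minutes at 56 Kbps. Real throughput could differ in either direction, for example lower because of retransmissions on noisy lines, or higher for compressible data on modems with built-in compression.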

Fax transmission was entering the mainstream. As modem speeds slowly grew from 2.4 to 56 Kbps over the period 1985–1995, fax machine installations and traffic exploded. Then, from the mid-to-late 1990s, fax usage declined almost as rapidly as it had grown, as Internet-based email and email-attachments offered a more convenient and cheaper way to perform the same functions and more besides.

In 1985, analogue/1G cellular networks had only recently commenced operation, and supported voice only. 2G arrived only after a further 7–8 years, in the early 1990s, including SMS/‘texting’ from the mid-1990s. 3G arrived another decade later, in the early 2000s, and matured to offer data transmission that was expensive and low-bandwidth. 4G followed another decade on, in the early 2010s, and 5G is emergent in 2018. In short, in 1985, handheld network-based computing was a quarter-century away.

3.1.3. Data

Data storage technology was dominated by magnetic disk for operational use and magnetic tape for archival. Optical data storage was emergent in the form of CDs, later followed by the higher-density DVD format. The recording medium transpired to have a short viability of c. 5–10 years. Portable solid-state storage devices (SSDs) emerged from the early 1990s and became increasingly economic for widespread use from c. 2000. Optical technology was quickly overtaken by ongoing improvements in hard disk drive (HDD) capacity and economics, and by reductions in the cost of SSDs, and usage was in rapid decline by 2010.

In 1985, textual and numeric data formats used 7-bit ASCII encoding, which was limited to 128 characters and hence to the Roman alphabet without diacritics such as umlauts. Apple’s Macintosh enabled the custom-creation of fonts from about the time of CLSR’s launch, bringing proportional fonts with it. However, extended 8-bit encodings such as ISO 8859-1 (Latin-1), supporting 256 characters and hence many of the variants of the Roman alphabet, were only beginning to be standardised at that time. Communications using all other character-sets depend on multi-byte formats. The most widely used of these, the variable-length UTF-8 encoding of Unicode, became available only in the early 1990s, and did not achieve dominance on the Web until c. 2010.
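The capacity limits of these encodings can be demonstrated with Python's built-in codecs. In this minimal sketch, `encoded_lengths` is a hypothetical helper, and the three codecs stand for 7-bit ASCII (128 code points), an 8-bit, 256-character encoding (ISO 8859-1, also called Latin-1) and UTF-8, which is a variable-length, multi-byte encoding of Unicode rather than an 8-bit character set.

```python
from typing import Optional


def encoded_lengths(text: str) -> dict[str, Optional[int]]:
    """Bytes needed for text in each encoding, or None if it cannot be represented."""
    lengths: dict[str, Optional[int]] = {}
    for codec in ("ascii", "latin-1", "utf-8"):
        try:
            lengths[codec] = len(text.encode(codec))
        except UnicodeEncodeError:
            # The encoding has no code point for at least one character.
            lengths[codec] = None
    return lengths


for sample in ("law", "Datenschutz für Bürger", "Привет"):
    print(f"{sample!r}: {encoded_lengths(sample)}")
```

Plain English text needs one byte per character in all three. The German sample cannot be expressed in ASCII, fits in Latin-1 at one byte per character, and needs two bytes for each ü in UTF-8, while the Cyrillic sample can be represented only in UTF-8.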

For structured forms of data, data-management depended on a range of file-structures, with early forms of database management system (DBMS) in widespread use. Relational DBMS were emergent as CLSR launched. Adoption at enterprise level was brisk, but slower in small business and especially in personal contexts. On the other hand, effective data management tools for sound, image and video were decades away.
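As a brief illustration of what the relational model offered, the sketch below uses Python's built-in `sqlite3` module, a modern embedded DBMS rather than a product of the period. Data is held in tables, and a declarative SQL query joins and aggregates them without the program navigating file structures itself. The table names, columns, rows and the helper `outstanding_by_customer` are hypothetical.

```python
import sqlite3


def outstanding_by_customer(conn: sqlite3.Connection) -> list[tuple[str, float]]:
    """Total of unpaid invoices per customer, expressed as one declarative query."""
    return conn.execute(
        """
        SELECT c.name, SUM(i.amount)
        FROM customer AS c JOIN invoice AS i ON i.customer_id = c.id
        WHERE i.paid = 0
        GROUP BY c.name
        ORDER BY c.name
        """
    ).fetchall()


# In-memory database with two related tables (hypothetical data).
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE invoice (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customer(id),
        amount REAL NOT NULL,
        paid INTEGER NOT NULL
    );
    INSERT INTO customer VALUES (1, 'Acme'), (2, 'Bloggs');
    INSERT INTO invoice VALUES (1, 1, 100.0, 0), (2, 1, 50.0, 1), (3, 2, 75.0, 0), (4, 1, 25.0, 0);
    """
)
print(outstanding_by_customer(conn))
```

The query states what result is wanted, leaving the DBMS to decide how to retrieve it; under the file-structure approach that preceded relational systems, the equivalent logic would typically have been hand-coded record by record.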

Standardisation and interoperability of record-formats and documents had begun with Electronic Data Interchange (EDI) standards, which were in limited use for some business documents before 1985, although primarily within the US. Standardisation has proceeded in a desultory manner across many industries, but over an extended period. Since the emergence of the Web, both formal standards such as XML Document Type Definitions (DTDs) and ‘lite’ versions of data-structure protocols such as JavaScript Object Notation (JSON) have been applied. In 1985, AutoCAD was emergent, and was beginning to provide de facto standard data formats for design data.
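The contrast between early positional formats and later self-describing ones can be sketched as follows. The message below is a hypothetical, simplified EDI-style purchase order that loosely echoes X12 delimiter conventions (segments ending in `~`, elements separated by `*`); it is not a conformant X12 or EDIFACT document, and the helper names are illustrative. The code re-expresses it as JSON using Python's standard `json` module.

```python
import json

# Hypothetical, simplified EDI-style purchase order. Segments end with "~"
# and elements are separated by "*"; this is NOT a conformant X12/EDIFACT message.
EDI_STYLE_ORDER = "BEG*00*SA*PO-1985-001~PO1*1*10*EA*2.50~PO1*2*4*EA*12.00~"


def parse_segments(message: str) -> list[list[str]]:
    """Split a delimited message into segments, then each segment into elements."""
    return [segment.split("*") for segment in message.split("~") if segment]


def to_json(message: str) -> str:
    """Re-express positional EDI-style segments as self-describing JSON."""
    order: dict = {"order_number": None, "lines": []}
    for tag, *elements in parse_segments(message):
        if tag == "BEG":
            # In this toy layout, the third element after the tag is the order number.
            order["order_number"] = elements[2]
        elif tag == "PO1":
            line_no, quantity, unit, price = elements
            order["lines"].append(
                {"line": int(line_no), "quantity": int(quantity),
                 "unit": unit, "unit_price": float(price)}
            )
    return json.dumps(order, indent=2)


print(to_json(EDI_STYLE_ORDER))
```

In the delimited form, the meaning of each element depends on its position, which sender and receiver must agree in advance; in the JSON output, each value carries its own field name.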

Data-compression standards for audio were emergent in 1985 and matured in the early 1990s. Image compression, by means of the still-much-used .gif and .jpg formats, emerged in the years following the journal’s launch, with video compression becoming available from the mid-1990s. The impetus for each was growth in available bandwidth sufficient to satisfy the geometrically greater needs of audio, image and video respectively.
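The ‘geometrically greater needs’ of each medium can be given rough magnitudes. The figures below are arithmetic on assumed, illustrative parameters (CD-quality stereo audio at 44.1 kHz and 16 bits per sample, a 640 × 480 image in 24-bit colour, and 25 such frames per second as video), compared with a 2400 bps modem; they are not measurements from the period, and the constant names are hypothetical.

```python
# Uncompressed data rates for illustrative (assumed) media parameters.
CD_AUDIO_BPS = 44_100 * 16 * 2    # samples/s x bits per sample x 2 channels
IMAGE_BYTES = 640 * 480 * 3       # pixels x 3 bytes per pixel (24-bit colour)
VIDEO_BPS = IMAGE_BYTES * 8 * 25  # bits per frame x 25 frames per second

MODEM_BPS = 2_400  # consumer modem speed of 1985, as given in the text

print(f"One 640x480 image: {IMAGE_BYTES:,} bytes")
for label, bps in [("CD-quality audio", CD_AUDIO_BPS), ("25 fps video", VIDEO_BPS)]:
    print(f"{label}: {bps:,} bit/s, about {bps / MODEM_BPS:,.0f} times a 2400 bps modem")
```

On these assumptions, uncompressed CD-quality audio needs about 1.4 Mbit/s, roughly 588 times a 2400 bps modem, and uncompressed video about 184 Mbit/s, more than 130 times the audio rate. Compression was therefore a precondition for each medium travelling over the networks of the time.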

3.1.4. Actuator technologies

Remotely operated garage-door openers date to the early 1930s, and parking-station boom-gate operations to the 1950s. Electro-mechanical, pneumatic and hydraulic means of acting on the real world were commonplace in industry during the mid-to-late 20th century. Computerised control systems that enabled the operation of actuators under program control were emergent when CLSR began publication. They have matured, but most are by their nature embedded inside more complex artefacts, e.g. in cars, initially in fuel-mix systems but subsequently in many sub-systems, and in whitegoods, and heating and cooling systems.
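As a hedged illustration of an actuator operated under program control, of the kind embedded in heating and cooling systems, the sketch below shows one of the simplest forms of such control logic: an on/off controller with a hysteresis band. The class name `Thermostat`, the setpoint, the band width and the sensor readings are all hypothetical, and the code makes no claim about how any particular embedded product worked.

```python
from dataclasses import dataclass


@dataclass
class Thermostat:
    """Hypothetical on/off (bang-bang) controller with a hysteresis band."""
    setpoint: float          # target temperature, degrees C
    band: float = 1.0        # tolerance either side of the setpoint
    heater_on: bool = False  # current actuator state

    def step(self, measured: float) -> bool:
        """Decide the actuator command from one sensor reading."""
        if measured < self.setpoint - self.band:
            self.heater_on = True
        elif measured > self.setpoint + self.band:
            self.heater_on = False
        # Within the band, keep the previous state to avoid rapid switching.
        return self.heater_on


controller = Thermostat(setpoint=20.0)
readings = [17.5, 19.0, 20.5, 21.5, 20.0, 18.8]
print([controller.step(t) for t in readings])
```

The hysteresis band is a common design choice: without it, readings hovering around the setpoint would switch the actuator on and off repeatedly, wearing the mechanism.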

In such areas as utilities (water, gas, electricity) and traffic management, supervisory control and data acquisition (SCADA) systems were well established in 1985. However, they operated on physical private networks, and hence they too attracted limited attention in law and policy discussions.

In secondary industry, at the time CLSR was launched in the mid-1980s, robotic tools were being integrated into automobile production lines. The techniques have been adopted somewhat more widely since then, for example in large warehouse complexes and large computer data archives.

Among the kinds of systems that were more obvious to people, and that are more often considered in discussions of ‘IT and law’, few included actuators in 1985. Examples include ATMs, which were mainstream before 1985, and EFT/POS, which was emergent at that time, and was first addressed in the journal in 1987. These, depending on the particular implementation, may involve carriages that ferry cards into and out of the device, and the dispensing of cash and receipts. Over time, some other public-facing computer-based systems incorporated actuators, such as toll-road and parking-station boom-gates integrated with payments systems.

This review of the technical aspects of information infrastructure in 1985 shows that multiple technologies were emerging, maturing and being over-run, in what observers of the time felt was something of a tumult (Toffler, 1980). The impacts of these technologies on their users – and on other people entirely – came as something of a shock to them. At least at that stage, technology was driven by enthusiastic technologists and then shaped by entrepreneurs. Their designs often paid limited attention to feedback from users, and were formed with even less feedforward from the as-yet insufficiently-imaginative public as to what people would like information technology to be and do, and to not be and not do.

3.2. Psycho-socio-economic factors in 1985

A key insight offered by the technosocial notion was the interdependence between technical and other factors. However, the driver of developments in the information society and economy of the mid-1980s was to a considerable extent the capabilities of new technologies. It was therefore appropriate to consider the technical aspects in the earlier section, and to move now to the other contextual factors, in order to document how the technologies were applied, and what impacts and implications they had. This sub-section begins by considering the social dimension, from the individual, via small groups to large groups, and then shifts to the economic and political dimensions.

3.2.1. Individuals

In 1985, the main setting within which individuals interacted with IT was as employees within organisations. Given that the technology was expensive to develop and only moderately adaptable, and many workplaces were largely hierarchical, workers were largely technology-takers. This was to some extent ameliorated by the then-mainstream ‘waterfall’ system development process, which featured a requirements analysis phase and, within some cultures, participative elements in design processes.

The prospect was emerging of impacts on individuals who were not themselves users of information infrastructure or participants in the relevant system. The term ‘usees’ is a useful descriptor for such people (Clarke, 1992; Fischer-Hübner and Lindskog, 2001; Baumer, 2015). Examples include data subjects in data collections for criminal intelligence and investigation agencies, and in private sector records accumulated by credit bureaux and in insurance-claim and tenancy databases.

Considerable numbers of individuals were also beginning to interact with personal computing devices, originally as kits for the technically-inclined (1974), followed by the Apple II and Commodore PET for the adventurous (1977), after which the TRS-80 and others (1980), the IBM PC (1981) and Apple Macintosh (1984) brought them into the mainstream. The substantial surge in individual activities was only at its very beginning in 1985, but expectations at the time were high.

The notion of ‘cyberspace’ had been introduced to sci-fi aficionados by Gibson (1983), and popularised in his best-selling novel ‘Neuromancer’ (1985). To the extent that a literary device can be reduced to a definition, its primary characteristic could be identified as ‘an individual hallucination arising from human-with-machine-and-data experience’. No evidence has been found that there was any awareness of the notion in the then fairly small computers and law community in 1985. In the Web of Science collection, the first mention found is in 1989. The term was first explained to CLSR readers in 8, 2 (Birch and Buck, 1992). Few references are found by Google Scholar even through the 1990s.

3.2.2. Groups

The earliest forms of computer-mediated (human) communications (CMC) date to the very early 1970s, in the form of email and e-lists. The field that came to be known as computer-supported cooperative work (CSCW) was initiated by Hiltz and Turoff (1978), and their electronic information exchange system (EIES) was further developed and applied (Hiltz and Turoff, 1981). The application of more sophisticated ‘groupware’ technology within group decision support systems (GDSS) was perceived as “a new frontier” as CLSR was launched (DeSanctis and Gallupe, 1984), and was further researched and commercialised (Dennis et al., 1988; Vogel et al., 1990). For a broader view of collaborative work through computers and over distance, see Wigan (1989).

Research and resources were focused on the workplace environment, and impacts elsewhere were more limited. Bulletin board systems (BBS) emerged in Chicago in 1978, Usenet at the University of North Carolina in 1979, and multi-user dungeons/domains (MUDs) at the University of Essex also in 1979. Internet relay chat (IRC) followed, out of Finland in 1988 (Rheingold, 1994). However, only a very small proportion of the population used them, or had even heard of them. These technologies and their uses attracted attention in CLSR, with ‘electronic mail’ and ‘e-mail’ first mentioned in 1, 1 (1985), BBS in 1987, newsgroups in 1989 (although Usenet not until 1995), ‘email’ in 1990, IRC in 1993 and MUDs in 2000. The exploitative world of ‘social media’ emerged in the form of ‘social networking services’ only in 2002–2003 (Clarke, 2004b), with the first mention in the journal in 2006.

3.2.3. Communities

In 1985, the idea that information infrastructure could assist larger-scale groups to interact, coalesce or sustain their integrity was yet to emerge. The Whole Earth ’Lectronic Link (the Well) was just being established, and hence virtual or eCommunities, and even virtual enhancements to existing communities, were at best emergent. Electronic support for communities was limited to a few sciences. It had not even reached the computing professions, let alone the less technically capable participants in local, regional, ethnic, lingual and occupational associations.

3.2.4. Societies

In 1985, only the very faintest glimmers of electronic support for whole societies were detectable. Among the constraints was the very slow emergence of convenient support for languages other than those that use the unadorned Roman alphabet. It is not easy to identify concrete examples of the information infrastructure of the mid-1980s having material impacts on whole societies. One somewhat speculative harbinger of change dates to shortly after CLSR’s launch. The implosion of the Soviet Union from 1989 onwards, and with it the Communist era, can be explained in many ways. One factor was arguably that there was a need to take advantage of computing, and hence PCs were becoming more widely available. But PCs enabled ‘samizdat’ – inexpensive composition, replication and even distribution of dissident newsletters – which was inconsistent with the suppression of information that had enabled the Soviet system to survive. Among many alternative interpretations, however, is the possibility that photocopying, which developed from the 1950s onwards, was at least as significant a destabilising factor as PCs were.

3.2.5. Economies

Even in 1985, the economic impact of information infrastructure had long been a primary focus. Given that this was, and has continued to be, much-travelled territory, it is not heavily emphasised here. A couple of aspects are worth noting, however. EDI had begun enabling electronic transmission of post-transaction business-to-business (B2B) documentation, in the USA from the 1970s and in Europe by the mid-1980s. There was, however, no meaningful business-to-consumer (B2C) eCommerce until Internet infrastructure and web-commerce standards were in place. This was achieved only a decade after CLSR’s launch, from the fourth quarter of 1994 onwards (Clarke, 2002).

At the time of CLSR’s launch, a current issue in various jurisdictions was whether software was subject to copyright. Only in two countries, Australia and Chile, was it held that it was not. Early forms of physical and software protections for software were already evident in 1985, and were to become major issues mid-way through CLSR’s first 3 years. In 1984–1987, Stewart Brand encapsulated the argument as ‘information wants to be free, it also wants to be expensive; that fundamental tension will not go away’ (Clarke, 2000). That conflict of interests has been evident ever since.

A further question current at the time was whether, and on what basis, software producers were, or should be, subject to liability for defects in their products and for harm arising from their use (Clarke, 1989a). In various jurisdictions, it took another two to three decades before any such consumer protections emerged.

At the level of macro-economics and the international order, IT was dominated by the USA and Europe. The USSR lagged due to its closed economy. Consumer electronics and motor vehicle manufacturing industries had been migrating to economies with lower labour costs, and Japan was particularly dynamic in these fields at the time. In 1982, Japan initiated an over-ambitious endeavour to catch up with the USA in IT design and manufacture, referred to as ‘Fifth Generation Computer Systems’. Shortly before CLSR’s launch, this had “spread fear in the United States that the Japanese were going to leapfrog the American computer industry” (Pollack, 1992).

3.2.6. Polities

The Internet’s first decade of public availability was marked by an assumption that anonymity was easily achieved, typified by a widely-publicised cartoon published in 1993 that used the line ‘on the Internet, nobody knows you’re a dog’. Anonymity is widely perceived as a double-edged sword, undermining accountability but also enabling the exposure of corruption and hypocrisy. However, computing technologies have a considerable propensity to harm, and even destroy, both privacy and human freedoms more generally. Warnings had been sounded repeatedly (e.g. Miller, 1972; Rosenberg, 1986; Clarke, 1994b). This topic is considered further below.

4. 2018

The previous section has noted that the information infrastructure available at the beginning of CLSR’s life was, in hindsight, still rather primitive and constraining. Its impacts and implications were accordingly of consequence, but still somewhat limited. Many of the topics that have attracted a great deal of attention in the journal during the last 35 years were barely even on the agenda in 1985.

This section shifts the focus forward to the present. The purpose is to identify the key aspects of the sociotechnical context within which CLSR’s authors and readers are working, thinking and researching at the time of the 200th issue. The discussion addresses the technical aspects within the same broad structure and sequence as before. In dealing with the human factors, on the other hand, a different sequence is used.

4.1. Information infrastructure in 2018

In each of the four segments used to categorise technologies, very substantial changes have occurred during the last 35 years. Some of those changes are sufficiently recent, and may transpire to be sufficiently disruptive, that the technological context might be materially different again as early as 5–10 years from the time of writing. For the most part, however, this section concentrates on ‘knowns’, and leaves consideration of ‘known unknowns’ and speculation about ‘unknown unknowns’ for the closing sections.

4.1.1. Devices

During the first 75 years of the digital world, 1945–2020, enterprise computing has been dominated successively by mainframes, mini-computers, rack-mounted micro-computers, desktop computers, portables and handhelds. During the period 2000–2015, however, a great many organisations progressively outsourced their larger devices, their processing and their vital data. A substantial proportion of the application software on which organisations depend is now developed, maintained and operated by other parties, and delivered from locations remote from the organisation in the form of (Application) Software as a Service (SaaS). Most outsourcing was undertaken without sufficiently careful evaluation of the benefits, costs and risks. The cost-savings achieved were in many cases quite modest, and were bought at the price of lost in-house expertise, much-reduced control over the business, and substantially greater dependencies and vulnerabilities (Clarke, 2010b, 2012a, 2012b, 2013b). The situation in relation to the smaller IT devices on which organisations depend is somewhat more complex, and is discussed below.

Since the mid-to-late 1990s, users of consumer devices have come to expect connectivity with virtually anyone and anything, anywhere in the world. Many consumer devices take advantage of wireless communications and lightweight batteries to operate untethered, and hence are mobile in the sense of being operable on the move, rather than merely in different places at different times.

The conception of consumer devices has changed significantly since the launch of the Apple iPhone in 2007. The iPhone was designed as an ‘appliance’, and promoted to consumers primarily on the basis of its convenience. With convenience, however, comes supplier power over the customer. The general-purpose nature and extensibility of consumer computing devices during the three decades 1977–2007 has given way to a closed model that denies users and third-party providers the ability to vary and extend the device’s functions, except in the limited ways permitted by the provider. This quickly became an almost universal feature of phones and then of tablets, with Google’s Android operating environment largely replicating the locked-down characteristic of Apple’s iOS. At the beginning of 2018, the two environments comprehensively dominate the now-vast handheld arena, accounting for 96% of phones and 98% of tablets.

A further factor encourages suppliers to shift users from computers to mere appliances. During the period 2005–2015, there was a headlong rush by consumers away from the model that had been in place during the first three decades of consumer computing, c. 1975–2005. Instead of running software products on their own devices, and retaining control over their own data, consumers have become heavily, and even entirely, dependent on remote (‘cloud’) services run by corporations. Their data is stored elsewhere, and a thin sliver of software, cut down from an ‘application’ to an ‘app’, runs on devices that they use, and that they nominally own, but that they do not control (Clarke, 2011, 2017).

Most apps do so little on the device itself that a large proportion of most devices’ functionality is unavailable when they have no Internet connection. This eventuality may be experienced only occasionally and briefly by city-dwellers, but frequently and for extended periods in remote, rural and even regional districts, and in mountainous and even merely hilly areas, in many countries. Many urban-living people in advanced economies currently lack both local knowledge and situational awareness, and are entirely dependent on their handhelds for navigation. Similarly, event planning in both social and business contexts has become unfashionable, and meetings are negotiated in click-space. Hence, many people whose devices fail them are suddenly isolated and dysfunctional. The resulting risks are psychological and perhaps economic, associated with social and professional non-performance, but also physical, associated with place.

This dependency is not the only security issue inherent in consumer computing devices. Desktops, laptops and handhelds alike are subject to a very wide array of threats, including viruses, worms, trojans, cookies, web-bugs, ransomware and social engineering exploits such as phishing. Unfortunately, the devices also harbour a very wide array of vulnerabilities. Security has not been designed into either the hardware or the software, and many errors have been made in implementation, particularly of the software. The result is a great deal of unintended insecurity. Worse still, suppliers have incorporated within the devices means whereby they and other organisations can gain access to data stored in them, and to some extent also to the functions that the devices perform. Examples of these features include disclosure by devices of stored data, including data about identity and location, auto-updating of software, default-execution of software, and backdoor entry. Consumer devices are insecure both unintentionally and by design (Clarke and Maurushat, 2007; Clarke, 2015). Further issues arise from the imposition of device identifiers, such as each handset’s International Mobile Equipment Identity (IMEI) serial number, and the use of proxies such as the identifiers of chips embedded within devices, e.g. in Ethernet ports and telephone SIM-cards. One of the justifications for such measures is security. However, while some aspects of security might be served, others, such as personal safety, may be seriously harmed.

The vast majority of employees of and contractors to organisations possess such devices. Organisations have to a considerable extent taken up the opportunity to invite their staff to ‘bring your own device’ (BYOD). This has been attractive to both parties, and organisations have been able to achieve cost-savings from the BYOD approach. However, the devices are not the only thing that staff have brought into the workplace. All of the insecurity, both unintended (such as malware) and designed-in (such as remote access by third parties), comes into the organisation with them, as do the vulnerabilities to withdrawal of service and loss of data. Although moderately reliable protection mechanisms are available, such as establishing protected enclaves within the device, and installing and using mobile device management (MDM) software, many organisations do not apply them, or apply them ineffectively.

It remains to be seen how quickly suppliers will reduce other form-factors to the level of appliances. The idea is attractive not only to suppliers, but also to governments and government agencies, and to corporations that desire tight control over users (such as content providers and consumer marketers). As in the handheld market segments, operating-system market share in the desktop and laptop arena is dominated by a duopoly, with Microsoft and Apple holding 96% of the market at the beginning of 2018. Desktop and laptop operating environments are being migrated in the direction of those in handhelds, and a large proportion of users’ data is stored outside their control. Hence, all consumer devices and the functions that they are used for – including all of those used in organisational contexts through the now-mainstream BYOD approach – have a very high level of vulnerability to supplier manipulation and control.

Beyond the mainstream form-factors discussed above, there is a proliferation of computing and communications capabilities embedded in other objects, including household appliances such as toasters and refrigerators, but also bus-stops and rubbish-bins. Drones are crossing the boundary from expensive military devices to commercial tools and entertainment toys (Clarke, 2014c). In addition, very small devices have emerged – in such roles as environmental monitors, drone swarms and smart dust – and their populations might rapidly become vast. The economic rationale is that, where large numbers of devices are deployed, they are individually expendable, and hence can be manufactured very cheaply by avoiding excessive investment in quality, durability and security. Such ‘eObjects’ evidence a wide range of core and optional attributes (Manwaring and Clarke, 2015). They are promoted as having important data collection roles to play in such contexts as smart homes and smart cities. Meanwhile, in 2018, motor vehicles bristle with apparatus that gathers data and sends it elsewhere, and the prospect of driverless cars looms large.

The software development landscape is very different in 2018 compared with 1985. The careful, engineering approach associated with the ‘waterfall’ development process was overrun from about 1990 onwards by various techniques that glorified the ‘quick and dirty’ approach. These used fashionable names such as Rapid Application Development and Agile Development, and were associated with slogans such as ‘permanent beta’ and ‘move fast and break things’. With these mindsets, requirements analysis is abbreviated, user involvement in design is curtailed, and quality is compromised; but the apparent cost of development is lower. In parallel, ‘development’ has increasingly given way to ‘integration’, with pre-written packages and libraries variously combined and adapted as a means of further reducing cost, although necessarily at the expense of functionality-fit and reliability.

A final aspect that needs to be considered is a major change in the transparency of application software. Early-generation development tools – machine-language, assembler, procedural and 4th generation (4G) languages – were reasonably transparent, in the sense that they embodied a model of a problem and/or solution that was accessible and auditable, provided that suitable technical expertise was available. Later-generation approaches, on the other hand, lack that characteristic. Expert systems model a problem-domain, and an explanation of how the model applies to each new instance may or may not be available. Even greater challenges arise with the most highly abstracted development techniques, such as neural networks (Clarke, 1991; Wigan and Clarke, 2013; Clarke, 2016a; Knight, 2017). Calls for “transparency, responsible engagement and … pro-social contributions” are marginalised rather than mainstream (Baxter and Sommerville, 2011, quotation from Diaconescu and Pitt, 2017, p.63).

4.1.2. Communications

At the level of transmission infrastructure, in many countries the network backbone or core was converted to fibre-optic cables remarkably rapidly, c. 1995–2005. This has coped very well with massively increased traffic volumes, although locations that are remote and thinly populated are both less well served and lack redundancy. The ‘tail-ends’, from Internet Service Providers (ISPs) to human users and other terminal devices, have been, and remain, more problematic. For fixed-location devices, even in urban areas, many countries have regarded fibre-optic as too expensive for ‘last-mile’ installation, leaving lower-capacity twisted-pair copper and coaxial cable connections as the primary tail-end technologies. In less densely populated regional, rural and remote areas, cable-connection is less common, and fixed wireless and satellite links are used instead.

Within premises, Ethernet cable-connections remain, but Wifi has taken over much of the space, particularly in domestic and small business settings. Cellular networks offer what is in effect a wireless wide-area tail-end, and use ‘backhaul’ connections to reach the Internet backbone. Traffic on these sub-networks is already very substantial, and if the promises made for 5G are fulfilled, it will grow much further. The diversity is very healthy, but balancing competition and innovation, on the one hand, against coherent and reliable management and stable services, on the other, becomes progressively more challenging.

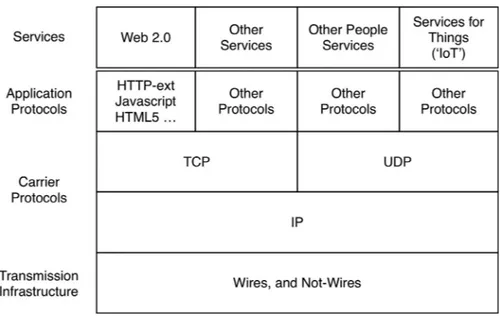

The Internet family of protocols uses the transmission infrastructure to carry messages that deliver services to end-points. The brief description here seeks to simplify, but to avoid the common error of blurring the distinctions between devices and networks, and among applications, protocols and services. Fig. 1 offers a much-simplified depiction of the present arrangements. A wide range of services run over the established lower-layer protocols. The Web’s http protocol continues to dominate traffic involving people, although from c. 2005 onwards in the much-changed form popularly referred to as Web 2.0 (Clarke, 2008a). However, an increasing proportion of Internet communication involves no human being at either end; instead, the link is machine-to-machine (M2M).

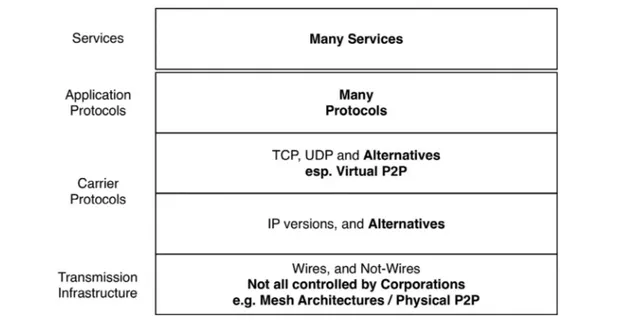

Fig. 2 endeavours to accommodate a number of changes that appear to be in train at present, with several additional infrastructural patterns appearing likely to expand beyond their beachheads. Mesh networks at the physical level, and peer-to-peer networking at the logical level, in many cases operate outside the control of corporations and government agencies. As the volume and diversity of M2M communications increase, alternatives to the intermediate-layer protocols may become significant. No serious alternative to the engine-room protocol, IP, is currently evident, however. The original version of the protocol (IPv4) remains dominant after 35 years, with IPv6 having made only limited inroads since its launch c. 2000. Developments in cellular services and IoT, however, may soon lift IPv6 markedly beyond its current share of c. 5% of traffic.

The openness of Internet architecture has created potential that has been applied to many purposes. Some parties have perceived some of those applications as being harmful to their interests. These include large corporations, such as those that achieve super-profits from copyrighted works, and political regimes that place low value on political and social freedoms and focus instead on the protection of existing sources of political power. Oppressive governments and corporations seek to constrain performance and to impose device and user identification and tracking, in order to facilitate their management of human behaviour. Some have also sought to influence the engineering Standards that define the Internet’s architecture, and to exercise direct control over Internet infrastructure, at least within their own jurisdictions.

4.1.3. Data

Many different technologies are needed to create digital data that represents the many relevant aspects of real-world phenomena and activities. By the end of the 20th century, most of those technologies already existed. The most challenging, and hence slowest to mature, were the capture of snapshots in three dimensions (3D), and the rendering of 3D objects, such as sculptures and industrial artefacts. Data formats are now mature, and interoperability among systems is reasonably reliable. DBMS technologies are also mature, and the greatest challenges now lie in achieving flexibility and adaptability as needs change.

In 2018, for documents, sound, images and video, ‘born-digital’ is the default. Financial and social transactions result in auto-capture of data as a byproduct. In addition, a great deal of data about ‘the quantified self’ emanates from ‘wellness devices’ and other forms of ‘wearables’ (Lupton, 2016). In the B2C and government-to-citizen (G2C) segments, much of the effort and cost of text-data capture has been transferred from organisations to individuals, in the form of self-service, particularly through web-forms. Moreover, as noted earlier, consumer devices are promiscuous, and pass large amounts of data to device suppliers and a wide assortment of other organisations.

These various threads of development have resulted in a massive increase in the intensity of data collection. That has been coupled with a switch from data destruction to data retention, because cheap bulk storage has made it less costly to retain data than to delete it selectively and progressively. Data discovery has become well supported by centralised indexes and widely available search-capabilities. Data access and data disclosure have been facilitated by the negotiation and widespread adoption of formalised Standards for data formatting and transmission. These factors have combined to make organisations heavily data-dependent, with the resulting complex usefully referred to as the digital surveillance economy (Clarke, 2018b). This is discussed further below.

Fig. 2 – Internetworking Post-2018.

Governments also have a great deal of interest in acquiring data, and may apply many of the techniques used by consumer marketing corporations. During the preparation of this article, for example, debate raged about the scope for election campaigns to be manipulated by carefully-timed and carefully-framed messages targeted by means of psychometric profiles developed by marketing services organisations. Government agencies may have greater ability than corporations to impose identifiers on individuals, and to force their use, e.g. as a condition of delivering services (Clarke, 2006a). The scope also exists for data to be collected from surveillance mechanisms such as closed-circuit TV (CCTV), particularly if coupled with effective facial recognition systems, and automated number plate recognition (ANPR).

The imposition of biometric identification is creeping out from its original uses in the context of criminal investigation, and into visa-application, border-crossing, employment and even schools. Uses of data and identifiers in service delivery are being complemented by uses in denial of service. Techniques imposed on refugees in Afghanistan (Farraj, 2010) may find their way into schemes in India, where the highly contentious Aadhaar biometric scheme has been imposed on a billion people (Rao and Greenleaf, 2013), and in the PRC, where a ‘social credit’ system has recently been deployed as a data-driven and semi-automated basis for social control (Chen and Cheung, 2017; Needham, 2018).

4.1.4. Actuator technologies

SCADA traffic carries not only data about such vital services as water supply, energy generation and supply, and traffic control – in air, sea, rail and road contexts – but also instructions that control those operations. Since c. 2000, SCADA traffic has migrated from physical private networks onto the open Internet. The Internet’s inherent insecurity gives rise to the risks of data meddling and the compromise of control systems. Destructive interference has been documented in relation to, for example, sewage processing and industrial centrifuges used in nuclear enrichment; and governments as diverse as North Korea, the PRC, Russia, Israel and the USA have been accused of having and deploying considerable expertise in these areas.

Robotics was for many decades largely confined to factories and warehouses, plus perennial, entertaining but ineffective and harmless exhibitions of humanoid devices purporting to be household workers and playthings. That may be changing, and quite rapidly. Drones already, and necessarily, operate with at least a limited degree of autonomy. Within-car IT has expanded well beyond fine-tuning the mix entering the cylinders of combustion engines. Robotics in public places is becoming familiar in the form of driverless car experiments, with early deployments emergent.

Another context in which the application of actuator technologies has expanded is the ‘smart home’. Garage doors operated from nearby handsets have been joined by remote control over heating and cooling systems, ovens and curtains. In some homes, switching lights on and off, even from within the premises, is dependent on connectivity with, and appropriate functioning of, services somewhere in the cloud. Many ‘smart city’ projects also involve not just data collection but also elements that perform actions in the real world.

The last frontier in the digitisation revolution has been 3D rendering. This involves building up layers of material in a manner similar to inking paper. In principle, this enables the manufacture of artefacts, or at least of components, or at the very least of prototypes. Longstanding computerised design capabilities, in particular AutoCAD, may thereby be integrated with relatively inexpensive ‘additive manufacturing’ (AM). As with all technological innovations, challenges abound (Turney, 2018), but the impacts might in due course be highly disruptive in some sectors.

Brief and necessarily incomplete though this review has been, it depicts a technological landscape that is very different from that of 1985. Information infrastructure is now vastly more pervasive and far more powerful, and involves a far greater degree of integration among its elements than was the case at the time of CLSR’s launch. It is far more tightly integrated with, and far more intrusive into, organisational operations and human behaviour, and its impacts and implications are far greater.

4.2. Psycho-socio-economic factors in 2018

This section identifies key aspects of the softer elements within the socio-technical arena. It uses the same structure as the description of the 1985 context, but presents the elements in a different sequence. During the early years of the open public Internet, there was a pervasive attitude that individual and social needs would be well served by the new infrastructure. However, that early optimism quickly proved to be naive (Clarke, 1999b,c; 2001b). The primary drivers evident in 2018 are not individual needs, but rather the political and economic interests of powerful organisations. This section accordingly deals first with the polities, then the economies, and only then returns to the individual, group, community and society aspects.

4.2.1. Polities

During the decade following CLSR’s launch, a ‘cyberspace ethos’ was evident (Clarke, 2004a), and considerable optimism existed about how the Internet would lead to more open government. The most extreme claims were of a libertarian ‘electronic frontier’, typified by John Perry Barlow’s ‘A Declaration of the Independence of Cyberspace’ (Barlow, 1996).

On the other hand, the rich potential of information technology for authoritarian use was already apparent (e.g. Miller, 1972; Clarke, 1994b). The notion of the ‘surveillance society’ emerged in the years following CLSR’s foundation (Weingarten, 1988; Lyon, 1994, 2001). The multiple technologies discussed above have been moulded into infrastructure that enables the longstanding idea of an ‘information state’ (Higgs, 2003) to be upgraded to the notion of the ‘surveillance state’ (Balkin and Levinson, 2006; Harris, 2010). This entails intensive and more or less ubiquitous monitoring of individuals by government agencies.

During recent decades, governments have been increasingly presumptuous about what can be collected, analysed, retained, disclosed, re-used and re-analysed, and the ‘open data’ mantra is currently being used to outflank data protection laws. Further, in even the most ‘free’ nations, national security agencies have used the opportunity presented by terrorist activities to exploit IT in order to achieve substantial compromises to civil liberties. It is impossible to overlook the symbolism of John Perry Barlow finally becoming a full member of ‘The Grateful Dead’ while this article was being researched and written.

Technologies initially enabled improvements in the efficiency of surveillance, by augmenting physical surveillance of the person, and then enabling dataveillance of the digital persona (Clarke, 1988). However, the surveillance state and surveillance economy have moved far beyond data alone. The digitisation of human communications has been accompanied by automated surveillance of all forms of messaging. More recently, the observation and video-recording of physical spaces has been developing into comprehensive behavioural surveillance of the individuals who operate in and pass through those spaces. Meanwhile, the migration of information-searching, reading and viewing from physical into electronic environments has enabled the surveillance of individuals’ experiences. The term ‘überveillance’ has been coined to encapsulate the omnipresence of surveillance, and the consolidation of data from multiple, increasingly intrusive and even embedded forms of surveillance (Michael and Michael, 2006, 2007; Clarke, 2007; Wigan, 2014a).

In parallel, there are ongoing battles for control over the design of the Internet’s architecture, protocols and infrastructural elements, and over operational aspects of the Internet. Such control has been sought through venues such as the Internet Corporation for Assigned Names and Numbers’ Governmental Advisory Committee (ICANN/GAC) and the International Telecommunication Union (ITU), with the Internet Governance Forum (IGF) offered as a sop to social interests. To date, the Internet Engineering Task Force (IETF), provided with an organisational home by the Internet Society (ISOC), has remained in control of the engineering of protocols, and ICANN remains the hub for operational coordination.

Within individual countries, however, national governments have interfered with the underlying telecommunications architecture and infrastructure, and with the operations of Internet backbone operators and ISPs. Although repressive regimes are particularly active in these areas, governments in the nominally ‘free world’ are very active as well. Specific interventions into information architecture and infrastructure can be usefully divided into three categories:

• the embedment of features that facilitate surveillance. Commonly cited examples include the US National Security Agency (NSA), working through AT&T (Angwin et al., 2015), and the PRC, through Huawei (Cherry, 2005; Hsu, 2014). A complement to this is the authorisation of hacking activities that would otherwise be illegal, such as surreptitious interference with the operation of devices not only of targeted individuals and organisations, but also of third parties, including intermediaries and the target’s associates;

• the blockage of content flows that the regime does not want to occur. The ‘Great Firewall of China’, with its strong focus on political content, is a leading example. However, various free-world governments have sought to block such content as pornography (particularly involving children), incitement to violence, traffic relating to fraudulent activities (‘scams’), and various forms of aggressive behaviour (‘cyber-bullying’); and

• control over particular services, or over traffic management as a whole. A popular notion is that of a ‘kill switch’, such that entire services, or even within-country access to the Internet, can be compromised or blocked at a time, and for a period, of the regime’s choosing. Egypt and Turkey are frequently cited as examples in this arena. In some free-world countries, related techniques are deployed to reduce network congestion when emergencies occur, and hence the scope exists for their use for other reasons.

Although the UK led developments in the very early years of computing technology, its industry was very quickly outpaced by the energy of US corporations. During the period c. 1950–2020, the US has led the world in most market segments of IT goods and services, with Europe a distant second. A current development that increases the likelihood of authoritarianism becoming embedded in information architecture and infrastructure is the emergence of the PRC as a major force in IT. It is already strongly represented in the strategically core element of backbone routers (Cherry, 2005).

Many such measures are, however, challenging to make effective, and most are subject to countermeasures. That has led to something of an ‘arms war’. For example, the use of cryptography to mask traffic content has given rise to government endeavours to ban strong cryptography or to require backdoor access for investigators. Similarly, obfuscation of the source and destination of traffic through the use of virtual private networks (VPNs), proxy-servers, and chains of proxy-servers (such as Tor) has stimulated bans and counter-countermeasures. All of these activities, of course, give rise to a wide array of IT law and security issues.

There is scope for political resistance, and possibly even for resilient democracy (Clarke, 2016b), e.g. through mesh networks and social media – channels that were much-used during the Arab Spring, c. 2010–2012 (Agarwal et al., 2012). In all cases, it is vital that protections exist for activists’ identities (Clarke, 2008b) and locations (Clarke, 2001a; Clarke and Wigan, 2011). However, technologies to support resistance, such as privacy-enhancing technologies (PETs), have a dismal record (Clarke, 2014a, 2016e). The efforts of powerful and well-resourced government agencies, once they are focused on a particular quarry, appear unlikely to be withstood by individual activists or even by suitably-advised advocacy organisations.

Another threat to democracy has been the adaptation of demagoguery from the eras of mass rallies and then of mass media to the information era. This is in some respects despite, but in other ways perhaps because of, the potential that information infrastructure embodies (Fuchs, 2018).

A larger constraint on government repression than popular resistance may be the ongoing growth in the power of corporations, and the gradual maturation of government outsourcing, currently to a level referred to as ‘public-private partnerships’. In the military sector, activities that are increasingly being sub-contracted out include logistics, the installation of infrastructure, the defence of infrastructure and personnel, and interrogation, although perhaps not yet the actual conduct of warfare. The pejorative term ‘mercenaries’ has been replaced by an expression that is presumably intended to give the role a positive gloss: ‘private military corporations’. The relationship between the public and private sectors could be moving towards what Schmidt and Cohen (2014) enthusiastically proposed: a high-tech, corporatised-government State. The organisational capacity of many governments to exercise control over a country’s inhabitants may wane, even as the technical capacity to do so rises to a crescendo. Corporations’ interests and capabilities may, however, take up where governments leave off.

4.2.2. Economies

Moore’s Law on the growth of transistor density, and hence processor power, may drive developments in some computationally-intensive applications for a few years yet, but it has become less relevant to most other uses of IT. Other factors have been of much greater significance in scaling up IT’s impacts. They include considerable reductions in the costs of devices; the diversity of devices and their improved fit to different contexts of use; substantial increases in the capacity to collect, analyse, store and apply data; greatly increased connectivity; and the resulting pervasiveness of connected devices and embedment of data-intensive IT applications in both economic and social activities. This sub-section deals with a number of key developments, beginning with micro considerations and moving on towards macro factors.

Disruptive technologies have wrought change in a wide variety of service industries, both informational and physical in nature. Currently, some segments of secondary industry are also facing the possibility of turmoil due to the emergence of additive manufacturing technologies. Initiatives such as MakerSpaces are exposing people to technologies that appear to have the capacity to significantly lower the cost of entry, enable mass-customisation, and spawn regionalised micro-manufacturing industries (Wigan, 2014b).

During the 1990s, it was widely anticipated that the market reach provided by the Internet would tend to result in disintermediation, and that both existing and aspiring new intermediaries would need to be fleet of foot to sustain themselves (McCubbrey, 1999; Chircu and Kauffman, 1999). In fact, the complexity and diversity that have come with virtualisation have resulted in an increase in outsourcing, and hence in more, larger and more powerful intermediaries.

The virtualisation of both servers and organisations has led to ‘cloudsourcing’, whereby devices within the organisation are largely limited to the capabilities necessary to reach remote services (Clarke, 2010a, 2010b). Organisations have gained convenience, and to some extent cost reductions, in return for substantial dependence on complex information infrastructure and a flotilla of service-providers, many unknown to, and out of reach of, the enterprise itself. This has given rise to considerable risks to the organisation and its data (Clarke, 2013b). At consumer level, a rapid switch occurred from the application-on-own-device model, which held sway 1975–2005, to dependence on remote services, which has dominated since c. 2010 (Clarke, 2011).

As the term ‘the information economy’ indicated (Drucker, 1968; Porat, 1977; Tapscott, 1996), there are many industries in which data, in its basic, specialised and derivative forms, is enormously valuable to corporations. This is evident with software and data, with assemblies of data, and even with organic chemistry in such contexts as pharmaceutical products, plant breeder rights and the human genome. There are no ‘property rights in data’, but there are many kinds of ‘rights