Correction

PSYCHOLOGICAL AND COGNITIVE SCIENCES

Correction for “Detecting affiliation in colaughter across 24 societies,” by Gregory A. Bryant, Daniel M. T. Fessler, Riccardo Fusaroli, Edward Clint, Lene Aarøe, Coren L. Apicella, Michael Bang Petersen, Shaneikiah T. Bickham, Alexander Bolyanatz, Brenda Chavez, Delphine De Smet, Cinthya Díaz, Jana Fančovičová, Michal Fux, Paulina Giraldo-Perez, Anning Hu, Shanmukh V. Kamble, Tatsuya Kameda, Norman P. Li, Francesca R. Luberti, Pavol Prokop, Katinka Quintelier, Brooke A. Scelza, Hyun Jung Shin, Montserrat Soler, Stefan Stieger, Wataru Toyokawa, Ellis A. van den Hende, Hugo Viciana-Asensio, Saliha Elif Yildizhan, Jose C. Yong, Tessa Yuditha, and Yi Zhou, which appeared in issue 17, April 26, 2016, of Proc Natl Acad Sci USA (113:4682–4687; first published April 11, 2016; 10.1073/pnas.1524993113).

The authors note that the affiliation for Saliha Elif Yildizhan should instead appear as Department of Molecular Biology and Genetics, Uludag University, Bursa 16059, Turkey. The corrected author and affiliation lines appear below. The online version has been corrected.

Gregory A. Bryanta,b,1, Daniel M. T. Fesslerb,c, Riccardo Fusarolid,e, Edward Clintb,c, Lene Aarøef, Coren L. Apicellag, Michael Bang Petersenh, Shaneikiah T. Bickhami, Alexander Bolyanatzj, Brenda Chavezk, Delphine De Smetl, Cinthya Díazk, Jana Fančovičovám, Michal Fuxn, Paulina Giraldo-Perezo, Anning Hup, Shanmukh V. Kambleq, Tatsuya Kamedar, Norman P. Lis, Francesca R. Lubertib,c, Pavol Prokopm,t, Katinka Quintelieru, Brooke A. Scelzab,c, Hyun Jung Shinv, Montserrat Solerw, Stefan Stiegerx,y, Wataru Toyokawaz, Ellis A. van den Hendeaa, Hugo Viciana-Asensiobb, Saliha Elif Yildizhancc, Jose C. Yongs, Tessa Yudithadd, and Yi Zhoup

aDepartment of Communication Studies, University of California, Los Angeles, CA 90095; bUCLA Center for Behavior, Evolution, and Culture, University of California, Los Angeles, CA 90095; cDepartment of Anthropology, University of California, Los Angeles, CA 90095; dInteracting Minds Center, Aarhus University, 8000 Aarhus C, Denmark; eCenter for Semiotics, Aarhus University, 8000 Aarhus C, Denmark; fDepartment of Political Science and Government, Aarhus University, 8000 Aarhus C, Denmark; gDepartment of Psychology, University of Pennsylvania, Philadelphia, PA 19104; hDepartment of Political Science and Government, Aarhus University, 8000 Aarhus C, Denmark; iIndependent Scholar; jDepartment of Anthropology, College of DuPage, Glen Ellyn, IL 60137; kDepartment of Psychology, Pontificia Universidad Catolica del Peru, San Miguel Lima, Lima 32, Peru; lDepartment of Interdisciplinary Study of Law, Private Law and Business Law, Ghent University, 9000 Ghent, Belgium; mDepartment of Biology, University of Trnava, 918 43 Trnava, Slovakia; nDepartment of Biblical and Ancient Studies, University of South Africa, Pretoria 0002, South Africa; oDepartment of Biology, University of Auckland, Auckland 1142, New Zealand; pDepartment of Sociology, Fudan University, Shanghai 200433, China; qDepartment of Psychology, Karnatak University Dharwad, Karnataka 580003, India; rDepartment of Social Psychology, University of Tokyo, 7 Chome-3-1 Hongo, Tokyo, Japan; sDepartment of Psychology, Singapore Management University, 188065 Singapore; tInstitute of Zoology, Slovak Academy of Sciences, 845 06 Bratislava, Slovakia; uInternational Strategy & Marketing Section, University of Amsterdam, 1012 Amsterdam, The Netherlands; vDepartment of Psychology, Pusan National University, Pusan 609-735, Korea; wDepartment of Anthropology, Montclair State University, Montclair, NJ 07043; xDepartment of Psychology, University of Konstanz, 78464 Konstanz, Germany; yDepartment of Psychology, University of Vienna, 1010 Vienna, Austria; zDepartment of Behavioral Science, Hokkaido University, Sapporo, Hokkaido Prefecture, 5 Chome-8 Kita Jonshi, Japan; aaDepartment of Product Innovation and Management, Delft University of Technology, 2628 Delft, The Netherlands; bbDepartment of Philosophy, Université Paris 1 Panthéon-Sorbonne, 75005 Paris, France; ccDepartment of Molecular Biology and Genetics, Uludag University, Bursa 16059, Turkey; and ddJakarta Field Station, Max Planck Institute for Evolutionary Anthropology, Jakarta 12930, Indonesia

www.pnas.org/cgi/doi/10.1073/pnas.1606204113

www.pnas.org PNAS | May 24, 2016 | vol. 113 | no. 21 | E3051


Detecting affiliation in colaughter across 24 societies

Gregory A. Bryanta,b,1, Daniel M. T. Fesslerb,c, Riccardo Fusarolid,e, Edward Clintb,c, Lene Aarøef, Coren L. Apicellag, Michael Bang Petersenh, Shaneikiah T. Bickhami, Alexander Bolyanatzj, Brenda Chavezk, Delphine De Smetl, Cinthya Díazk, Jana Fančovičovám, Michal Fuxn, Paulina Giraldo-Perezo, Anning Hup, Shanmukh V. Kambleq, Tatsuya Kamedar, Norman P. Lis, Francesca R. Lubertib,c, Pavol Prokopm,t, Katinka Quintelieru, Brooke A. Scelzab,c, Hyun Jung Shinv, Montserrat Solerw, Stefan Stiegerx,y, Wataru Toyokawaz, Ellis A. van den Hendeaa, Hugo Viciana-Asensiobb, Saliha Elif Yildizhancc, Jose C. Yongs, Tessa Yudithadd, and Yi Zhoup

aDepartment of Communication Studies, University of California, Los Angeles, CA 90095; bUCLA Center for Behavior, Evolution, and Culture, University of California, Los Angeles, CA 90095; cDepartment of Anthropology, University of California, Los Angeles, CA 90095; dInteracting Minds Center, Aarhus University, 8000 Aarhus C, Denmark; eCenter for Semiotics, Aarhus University, 8000 Aarhus C, Denmark; fDepartment of Political Science and Government, Aarhus University, 8000 Aarhus C, Denmark; gDepartment of Psychology, University of Pennsylvania, Philadelphia, PA 19104; hDepartment of Political Science and Government, Aarhus University, 8000 Aarhus C, Denmark; iIndependent Scholar; jDepartment of Anthropology, College of DuPage, Glen Ellyn, IL 60137; kDepartment of Psychology, Pontificia Universidad Catolica del Peru, San Miguel Lima, Lima 32, Peru; lDepartment of Interdisciplinary Study of Law, Private Law and Business Law, Ghent University, 9000 Ghent, Belgium; mDepartment of Biology, University of Trnava, 918 43 Trnava, Slovakia; nDepartment of Biblical and Ancient Studies, University of South Africa, Pretoria 0002, South Africa; oDepartment of Biology, University of Auckland, Auckland 1142, New Zealand; pDepartment of Sociology, Fudan University, Shanghai 200433, China; qDepartment of Psychology, Karnatak University Dharwad, Karnataka 580003, India; rDepartment of Social Psychology, University of Tokyo, 7 Chome-3-1 Hongo, Tokyo, Japan; sDepartment of Psychology, Singapore Management University, 188065 Singapore; tInstitute of Zoology, Slovak Academy of Sciences, 845 06 Bratislava, Slovakia; uInternational Strategy & Marketing Section, University of Amsterdam, 1012 Amsterdam, The Netherlands; vDepartment of Psychology, Pusan National University, Pusan 609-735, Korea; wDepartment of Anthropology, Montclair State University, Montclair, NJ 07043; xDepartment of Psychology, University of Konstanz, 78464 Konstanz, Germany; yDepartment of Psychology, University of Vienna, 1010 Vienna, Austria; zDepartment of Behavioral Science, Hokkaido University, Sapporo, Hokkaido Prefecture, 5 Chome-8 Kita Jonshi, Japan; aaDepartment of Product Innovation and Management, Delft University of Technology, 2628 Delft, The Netherlands; bbDepartment of Philosophy, Université Paris 1 Panthéon-Sorbonne, 75005 Paris, France; ccDepartment of Molecular Biology and Genetics, Uludag University, Bursa 16059, Turkey; and ddJakarta Field Station, Max Planck Institute for Evolutionary Anthropology, Jakarta 12930, Indonesia

Edited by Susan T. Fiske, Princeton University, Princeton, NJ, and approved March 9, 2016 (received for review December 18, 2015)

Laughter is a nonverbal vocal expression that often communicates positive affect and cooperative intent in humans. Temporally coincident laughter occurring within groups is a potentially rich cue of affiliation to overhearers. We examined listeners’ judgments of affiliation based on brief, decontextualized instances of colaughter between either established friends or recently acquainted strangers. In a sample of 966 participants from 24 societies, people reliably distinguished friends from strangers with an accuracy of 53–67%. Acoustic analyses of the individual laughter segments revealed that, across cultures, listeners’ judgments were consistently predicted by voicing dynamics, suggesting perceptual sensitivity to emotionally triggered spontaneous production. Colaughter affords rapid and accurate appraisals of affiliation that transcend cultural and linguistic boundaries, and may constitute a universal means of signaling cooperative relationships.

laughter | cooperation | cross-cultural | signaling | vocalization

Significance

Human cooperation requires reliable communication about social intentions and alliances. Although laughter is a phylogenetically conserved vocalization linked to affiliative behavior in nonhuman primates, its functions in modern humans are not well understood. We show that judges all around the world, hearing only brief instances of colaughter produced by pairs of American English speakers in real conversations, are able to reliably identify friends and strangers. Participants’ judgments of friendship status were linked to acoustic features of laughs known to be associated with spontaneous production and high arousal. These findings strongly suggest that colaughter is universally perceivable as a reliable indicator of relationship quality, and contribute to our understanding of how nonverbal communicative behavior might have facilitated the evolution of cooperation.

Author contributions: G.A.B. designed research; G.A.B., R.F., E.C., L.A., C.L.A., M.B.P., S.T.B., A.B., B.C., D.D.S., C.D., J.F., M.F., P.G.-P., A.H., S.V.K., T.K., N.P.L., F.R.L., P.P., K.Q., B.A.S., H.J.S., M.S., S.S., W.T., E.A.v.d.H., H.V.-A., S.E.Y., J.C.Y., T.Y., and Y.Z. performed research; G.A.B. and R.F. analyzed data; G.A.B., D.M.T.F., and R.F. wrote the paper; D.M.T.F. conceived and organized the cross-cultural component; and E.C. coordinated cross-cultural researchers.

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

Data deposition: Experimental response data from all study sites and acoustic data from all laugh stimuli are available at https://escholarship.org/uc/item/99j8r0gx.

1To whom correspondence should be addressed. Email: gabryant@ucla.edu.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.

Humans exhibit extensive cooperation between unrelated individuals, managed behaviorally by a suite of elaborate communication systems. Social coordination relies heavily on language, but nonverbal behaviors also play a crucial role in forming and maintaining cooperative relationships (1). Laughter is a common nonverbal vocalization that universally manifests across a broad range of contexts, and is often associated with prosocial intent and positive emotions (2–5), although it can also be used in a threatening or aggressive manner (2). That laughter is inherently social is evident in the fact that people are up to 30 times more likely to laugh in social contexts than when alone (6). Despite the ubiquity and similarity of laughter across all cultures, its communicative functions remain largely unknown. Colaughter is simultaneous laughter between individuals in social interactions, and occurs with varying frequency as a function of the sex and relationship composition of the group: friends laugh together more than strangers, and groups of female friends tend to laugh more than groups of male friends or mixed-sex groups (7, 8). Colaughter can indicate interest in mating contexts (9), especially if it is synchronized (10), and is a potent stimulus for further laughter (i.e., it is contagious) (11). Researchers have focused on laughter within groups, but colaughter potentially provides rich social information to those outside of the group. Against this backdrop, we examined (i) whether listeners around the world can determine the degree of social closeness and familiarity between pairs of people solely on the basis of very brief decontextualized recordings of colaughter, and (ii) which acoustic features in the laughs might influence such judgments.

Laughter is characterized by neuromechanical oscillations involving rhythmic laryngeal and superlaryngeal activity (12, 13). It often features a series of bursts or calls, collectively referred to as bouts. Laugh acoustics vary dramatically both between and within speakers across bouts (14), but laughter appears to follow a variety of production rules (15). Comparative acoustic analyses examining play vocalizations across several primate species suggest that human laughter is derived from a homolog dating back at least 20 Mya (16, 17). Humans evolved species-specific sound features in laughs involving higher proportions of periodic components (i.e., increasingly voiced), and a predominantly egressive airflow. This pattern is different from laugh-like vocalizations of our closest nonhuman relative, Pan troglodytes, which incorporate alternating airflow and mostly noisy, aperiodic structure (2, 16). In humans, relatively greater voicing in laughs is judged to be more emotionally positive than unvoiced laughs (18), as is greater variability in pitch and loudness (19). People produce different perceivable laugh types [e.g., spontaneous (or Duchenne) versus volitional (or non-Duchenne)] that correspond to different communicative functions and underlying vocal production systems (3, 20–22), with spontaneous laughter produced by an emotional vocal system shared by many mammals (23, 24). Recent evidence suggests that spontaneous laughter is often associated with relatively greater arousal in production (e.g., increased pitch and loudness) than volitional laughter, and contains relatively more features in common with nonhuman animal vocalizations (20) (Audios S1–S6). These acoustic differences might be important for making social judgments if the presence of spontaneous (i.e., genuine) laughter predicts cooperative social affiliation, but the presence of volitional laughter does not.

All perceptual studies to date have examined individual laughs, but laughter typically occurs in social groups, often with multiple simultaneous laughers. Both because social dynamics can change rapidly and because newcomers will often need to quickly assess the membership and boundaries of coalitions, listeners frequently must make rapid judgments about the affiliative status obtaining within small groups of interacting individuals; laughter may provide an efficient and reliable cue of affiliation. If so, we should expect humans to exhibit perceptual adaptations sensitive to colaughter dynamics between speakers. However, to date no study has examined listeners’ judgments of the degree of affiliation between laughers engaged in spontaneous social interactions.

We conducted a cross-cultural study across 24 societies (Fig. 1) examining listeners’ judgments of colaughter produced by American English-speaking dyads composed either of friends or newly acquainted strangers, with listeners hearing only extremely brief decontextualized recordings of colaughter. This “thin slice” approach is useful because listeners receive no extraneous information that could inform their judgments, and success with such limited information indicates particular sensitivity to the stimulus (25). A broadly cross-cultural sample is important if we are to demonstrate the independence of this perceptual sensitivity from the influences of language and culture (26). Although cultural factors likely shape pragmatic considerations driving human laughter behavior, we expect that many aspects of this phylogenetically ancient behavior will transcend cultural differences between disparate societies.

Results

Judgment Task. We used a model comparison approach in which variables were entered into generalized linear mixed models and effects on model fit were measured using Akaike Information Criterion (for all model comparisons, see SI Appendix, Tables S1 and S2). This approach allows researchers to assess which combination of variables best fits the pattern of data without comparison with a null model. The data were modeled using the glmer procedure of the lme4 package (27) in the statistical platform R (v3.1.1) (28). Our dependent measures consisted of two questions: one forced-choice item and one rating scale. For question 1 (Do you think these people laughing were friends or strangers?) data were modeled using a binomial (logistic) link function, with judgment accuracy (hit rate) as a binary outcome (1 = correct; 0 = incorrect). For question 2 (How much do you think these people liked each other?), we used a Gaussian link function with rating response (1–7) as a continuous outcome.
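The model comparison logic described above can be illustrated with a short sketch. It ranks candidate models by AIC = 2k − 2 ln L, where k is the number of estimated parameters and L the maximized likelihood. The model names, parameter counts, and log-likelihood values below are hypothetical placeholders chosen for illustration, not values from the study; the actual models were fit with glmer in R.

```python
from typing import Dict, List, Tuple


def aic(log_likelihood: float, n_params: int) -> float:
    """Akaike Information Criterion; lower values indicate a better trade-off
    between fit and model complexity."""
    return 2 * n_params - 2 * log_likelihood


def rank_models(fits: Dict[str, Tuple[float, int]]) -> List[Tuple[str, float, float]]:
    """Return (name, AIC, delta-AIC) tuples sorted from best to worst."""
    scores = {name: aic(ll, k) for name, (ll, k) in fits.items()}
    best = min(scores.values())
    return sorted(
        ((name, score, score - best) for name, score in scores.items()),
        key=lambda item: item[1],
    )


# Hypothetical (log-likelihood, number of parameters) pairs for two candidates.
candidate_fits = {
    "sex_only": (-31050.0, 4),
    "sex_plus_familiarity_x_dyad": (-29800.0, 9),
}

ranking = rank_models(candidate_fits)
for name, score, delta in ranking:
    print(f"{name}: AIC={score:.1f}, delta={delta:.1f}")
```

Because AIC penalizes each added parameter, a richer model is preferred only when its gain in log-likelihood outweighs the penalty.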

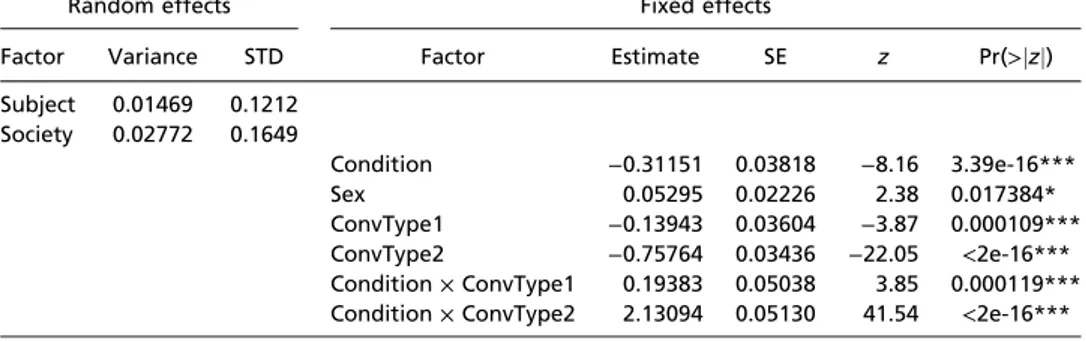

Fig. 1. Map of the 24 study site locations.

Fig. 2. Rates of correct judgments (hits) in each study site broken down by experimental condition (friends or strangers), and dyad type (male–male, male–female, female–female). Chance performance represented by 0.50. For example, the bottom right graph of the United States results shows that female–female friendship dyads were correctly identified 95% of the time, but female–female stranger dyads were identified less than 50% of the time. Male–male and mixed-sex friendship dyads were identified at higher rates than male–male and mixed-sex stranger dyads. This contrasts with Korea, for example, where male–male and mixed-sex friendship dyads were identified at lower rates than male–male and mixed-sex stranger dyads. In every society, female–female friendship dyads were identified at higher rates than all of the other categories.

Bryant et al. PNAS | April 26, 2016 | vol. 113 | no. 17 | 4683

Across all participants, the overall rate of correct judgments in the forced-choice measure (friends or strangers) was 61% (SD = 0.49), a performance significantly better than chance (z = 40.5, P < 0.0001) (Fig. 2 and SI Appendix, Table S3). The best-fitting model was a generalized linear mixed model by the Laplace approximation, with participant sex as a fixed effect, familiarity and dyad type as interacting fixed effects, participant and study site as random effects, and hit rate (percent correct) as the dependent measure (Table 1). Participants (VAR = 0.014; SD = 0.12) and study site (VAR = 0.028; SD = 0.17) accounted for very little variance in accuracy in the forced-choice measure. Familiarity interacted with dyad type, with female friends being recognized at higher rates than male friends (z = 42.96, P < 0.001), whereas male strangers were recognized at higher rates than female strangers (z = −22.57, P < 0.0001). A second significant interaction indicates that mixed-sex friends were recognized at higher rates than male friends, and mixed-sex strangers were recognized at lower rates than male strangers (z = 4.42, P < 0.001). For the second question (i.e., “How much do you think these people liked each other?”) the same model structure was the best fit, with a similar pattern of results (SI Appendix, Fig. S1 and Table S4).
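The reported SD of 0.49 alongside a 61% hit rate follows from accuracy being coded 0 or 1: a binary variable with mean p has standard deviation sqrt(p(1 − p)). The sketch below checks this and adds a deliberately simplified z-test against chance. The trial count passed to the z-test is a hypothetical value, and the test treats trials as independent, which the mixed model in the paper does not, so it is not expected to reproduce the reported z.

```python
import math


def bernoulli_sd(p: float) -> float:
    """Standard deviation of a 0/1 outcome whose mean (hit rate) is p."""
    return math.sqrt(p * (1.0 - p))


def z_vs_chance(p_hat: float, n_trials: int, chance: float = 0.5) -> float:
    """Naive one-sample z statistic for a proportion against chance.

    Assumes independent trials; it ignores the participant and study-site
    clustering that the mixed model accounts for.
    """
    standard_error = math.sqrt(chance * (1.0 - chance) / n_trials)
    return (p_hat - chance) / standard_error


# Hit rates taken from the text: overall, female participants, male participants.
for rate in (0.61, 0.62, 0.60):
    print(rate, round(bernoulli_sd(rate), 2))

# Hypothetical trial count, for illustration only.
z_example = z_vs_chance(0.61, n_trials=1000)
```

All three hit rates give an SD that rounds to 0.49, which is why the reported SDs carry little information beyond the means themselves.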

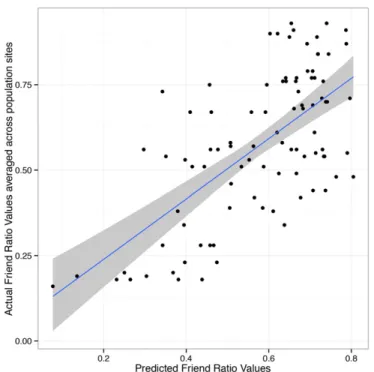

Overall, female friends were identified at the highest rate in every society without exception, but there was also a universal tendency to judge female colaughers as friends (SI Appendix, Fig. S2). Forced-choice responses for each colaughter trial were collapsed across societies and compared across dyad types, revealing that a response bias to answer “friends” existed in judgments of female dyads (70%), F(2, 47) = 7.25, P = 0.002, but not male (46%) or mixed-sex dyads (49%), which did not differ from one another (LSD test, P = 0.73). Additionally, female participants (M = 0.62; SD = 0.49) had slightly greater accuracy than male participants (M = 0.60; SD = 0.49) overall (z = 2.31, P < 0.05).

Acoustic Analysis. Acoustic features, including the frequency and temporal dynamics of voiced and unvoiced segments, were automatically extracted from the individual laugh segments and used to statistically reconstruct the rate at which participants judged each colaugh segment as a friendship dyad. We used an ElasticNet process (29) to individuate key features to assess in a multiple linear regression and a fivefold cross-validated multiple linear regression to estimate coefficients of the selected features, repeating the process 100 times to ensure stability of the results (Table 2). The resulting model was able to reliably predict participants’ judgments that colaughers were friends, adjusted R² = 0.43 [confidence interval (CI) 0.42–0.43], P = 0.0001 (Fig. 3). Across cultures, laughs that had shorter call duration, less regular pitch and intensity cycles, together with less variation in pitch cycle regularity were more likely to be judged to be between friends (for complete details of acoustic analysis, see SI Appendix).
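Two pieces of the procedure above, the fivefold split and the adjusted R² statistic, can be sketched in a few lines. This is not the authors' pipeline and does not implement the ElasticNet selection step. The item count of 96 (two individual laughs for each of 48 colaughter segments) and the use of four predictors (the four listed in Table 2) are assumptions made for illustration.

```python
import random
from typing import List, Tuple


def kfold_indices(n_items: int, k: int = 5, seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Shuffle item indices and return k (train, test) index splits.

    Every item appears in exactly one test fold.
    """
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    splits = []
    for test_fold in folds:
        held_out = set(test_fold)
        train = [i for i in indices if i not in held_out]
        splits.append((sorted(train), sorted(test_fold)))
    return splits


def adjusted_r2(r2: float, n_obs: int, n_predictors: int) -> float:
    """Adjusted R-squared, which penalizes R-squared for the number of predictors."""
    return 1.0 - (1.0 - r2) * (n_obs - 1) / (n_obs - n_predictors - 1)


# Assumed: 96 individual laughs and 4 selected acoustic predictors.
splits = kfold_indices(n_items=96, k=5, seed=1)
for fold_number, (train, test) in enumerate(splits, start=1):
    print(fold_number, len(train), len(test))
```

Repeating the split with different seeds, as in the 100 repetitions mentioned above, gives a sense of how stable the estimated coefficients are across partitions of the data.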

Discussion

Across all societies, listeners were able to distinguish pairs of colaughers who were friends from those who were strangers that had just met. Biases, presumably reflecting panhuman patterns in the occurrence of laughter in everyday life, existed in all societies sampled as well, such that participants were more likely to assume that female colaughers were friends than strangers. Male strangers were also recognized universally at significantly high rates, and participants worldwide rated the members of these dyads as liking each other the least among all pairs. Dynamic acoustic information in the laughter predicted the accuracy of judgments, strongly suggesting that participants attended closely to these sound features, likely outside of conscious awareness. The judgment pattern was remarkably similar across disparate societies, including those with essentially no familiarity with English, the language of the target individuals whose laughter was evaluated. These results constitute strong preliminary evidence that colaughter provides a reliable cue with which overhearers (and, presumably, colaughers themselves) can assess the degree of affiliation between interactants. Although embedded within discourse, laughter is nonverbal in nature and presents universally interpretable features, presumably reflecting phylogenetic antiquity predating the evolution of language.

Together with auxiliary experiments on the laugh stimuli (SI Appendix), acoustic data strongly suggest that individual laugh characteristics provided much of the information, allowing our participants to accurately differentiate between friends and strangers. Laugh features predicting listeners’ friend responses included shorter call duration, associated with judgments of friendliness (18) and spontaneity (20), as well as greater pitch and loudness irregularities, associated with speaker arousal (30). Acoustic analyses comparing laughs within a given copair did not indicate any contingent dynamic relationship that could plausibly correspond to percepts of entrainment or coordination one might expect from familiar interlocutors. Indeed, our colaugh audio clips may be too short to capture shared temporal dynamics that longer recordings might reveal. A second group of United States listeners evaluated artificial colaughter pairs constructed by shuffling the individual laugh clips within dyad categories (SI Appendix). Consonant with the conclusion that our main result was driven by features of the individual laughs rather than interactions between them, these artificial copairs were judged quite similarly to the original copairs in the main study. Finally, a third group of United States listeners rated the individual laughs on the affective dimensions of arousal and valence; these judgments were positively associated with the likelihood that, in its colaughter context, a given laugh was judged in the main study as having occurred in a friendship dyad.

Table 1. Best-fit model for question 1 (Do you think these people laughing were friends or strangers?)

Random effects

| Factor | Variance | STD |
| --- | --- | --- |
| Subject | 0.01469 | 0.1212 |
| Society | 0.02772 | 0.1649 |

Fixed effects

| Factor | Estimate | SE | z | Pr(>\|z\|) |
| --- | --- | --- | --- | --- |
| Condition | −0.31151 | 0.03818 | −8.16 | 3.39e-16*** |
| Sex | 0.05295 | 0.02226 | 2.38 | 0.017384* |
| ConvType1 | −0.13943 | 0.03604 | −3.87 | 0.000109*** |
| ConvType2 | −0.75764 | 0.03436 | −22.05 | <2e-16*** |
| Condition × ConvType1 | 0.19383 | 0.05038 | 3.85 | 0.000119*** |
| Condition × ConvType2 | 2.13094 | 0.05130 | 41.54 | <2e-16*** |

*P < 0.05; ***P < 0.001.

Table 2. Sample coefficients from one run of the fivefold cross-validated model for friend ratio across 24 societies

| Predictor | β (SE) fold 1 | β (SE) fold 2 | β (SE) fold 3 | β (SE) fold 4 | β (SE) fold 5 |
| --- | --- | --- | --- | --- | --- |
| Intercept | 0.611 (0.177) | 0.578 (0.114) | 0.547 (0.114) | 0.566 (0.125) | 0.594 (0.104) |
| Jitter mean | 1.720 (0.345) | 1.652 (0.306) | 1.648 (0.328) | 1.545 (0.335) | 1.720 (0.300) |
| Jitter SD | −1.826 (0.325) | −1.797 (0.305) | −1.747 (0.302) | −1.697 (0.338) | −1.9 (0.297) |
| Fifth percentile shimmer | 0.280 (0.199) | 0.290 (0.131) | 0.315 (0.127) | 0.324 (0.146) | 0.302 (0.119) |
| Mean call duration | −0.387 (0.075) | −0.358 (0.08) | −0.37 (0.084) | −0.412 (0.09) | −0.385 (0.07) |

Inclusion in cooperative groups of allied individuals is often a key determinant of social and material success; at the same time, social relationships are dynamic, and can change over short time spans. As a consequence, in our species’ ancestral past, individuals who could accurately assess the current degree of affiliation between others stood to gain substantial fitness benefits. Closely allied individuals often constitute formidable opponents; similarly, such groups may provide substantial benefits to newcomers who are able to gain entry. Many social primates exhibit these political dynamics, along with corresponding cognitive abilities (31); by virtue of the importance of cooperation in human social and economic activities, ours is arguably the political species par excellence. However, even as language and cultural evolution have provided avenues for evolutionarily unprecedented levels of cooperation and political complexity in humans, we continue to use vocal signals of affiliation that apparently predate these innovations. As noted earlier, human laughter likely evolved from labored breathing during play of the sort exhibited by our closest living relatives, a behavior that appears to provide a detectable cue of affiliation among extant nonhuman primates. The capability for speech affords vocal mimicry in humans, and as such, the ability to generate volitional emulations of cues that ordinarily require emotional triggers. In turn, because of the importance of distinguishing cues indicative of deeply motivated affiliation from vocalizations that are not contingent on such motives, producers’ vocal mimicry of laughter will have favored the evolution of listeners’ ability to discriminate between genuine and volitional tokens. However, the emergence of such discriminative ability will not have precluded the utility of the production of volitional tokens, as these could then become normative utterances prescribed in the service of lubricating minimally cooperative interactions; that is, “polite laughter” emerges. Laughter and speech have thus coevolved into a highly interactive and flexible vocal production ensemble involving strategic manipulation and mindreading among social agents.

Fig. 3. Acoustic-based model predictions of friend ratio (defined as the overall likelihood of each single laugh being part of a colaugh segment produced between individuals identified by participants as being friends) (on the x axis) with the actual values (on the y axis) (95% CI).

Fig. 4. Six sample waveforms and narrowband FFT spectrograms (35-ms Gaussian analysis window, 44.1-kHz sampling rate, 0- to 5-kHz frequency range, 100- to 600-Hz F0 range) of colaughter from each experimental condition (friends and strangers), and dyad type (male–male, male–female, female–female). For each colaugh recording, the Top and Middle show the waveforms from each of the constituent laughs, and the spectrogram collapses across channels. Blue lines represent F0 contours. The recordings depicted here exemplify stimuli that were accurately identified by participants. Averaging across all 24 societies, the accuracy (hit rate) for the depicted recordings were: female–female friendship, 85%; mixed-sex friendship, 75%; male–male friendship, 78%; female–female strangers, 67%; mixed-sex strangers, 82%; male–male strangers, 73%.

This finding opens up a host of evolutionary questions concerning laughter. Can affiliative laughter be simulated effectively, or is it an unfakeable signal? Hangers-on might do well to deceptively signal to overhearers that they are allied with a powerful coalition, whereas others would benefit from detecting such deception. If the signal is indeed honest, what keeps it so? Does the signal derive from the relationship itself (i.e., can unfamiliar individuals allied because of expedience signal their affiliation through laughter) or, consonant with the importance of coordination in cooperation, is intimate knowledge of the other party a prerequisite? Paralleling such issues, at the proximate level, numerous questions remain. For example, given universal biases that apparently reflect prior beliefs, future studies should both explore listeners’ accuracy in judging the sex of colaughers and examine the sources of such biases. Our finding that colaughter constitutes a panhuman cue of affiliation status is thus but the tip of the iceberg when it comes to understanding this ubiquitous yet understudied phenomenon.

Methods

All study protocols were approved by the University of California, Los Angeles Institutional Review Board. At all study sites, informed consent was obtained verbally before participation in the experiment. An informed consent form was signed by all participants providing voice recordings for laughter stimuli.

Stimuli. All colaughter segments were extracted from conversation recordings, originally collected for a project unrelated to the current study, made in 2003 at the Fox Tree laboratory at the University of California, Santa Cruz. The recorded conversations were between pairs of American English-speaking undergraduate students who volunteered to participate in exchange for course credit for an introductory class in psychology. Two rounds of recruitment were held. In one, participants were asked to sign up with a friend whom they had known for any amount of time. In the other, participants were asked to sign up as individuals, after which they were paired with a stranger. The participants were instructed to talk about any topic of their choosing; “bad roommate experiences” was given as an example of a possible topic. The average length of the conversations from which the stimuli used in this study were extracted was 13.5 min (mean length ± SD = 809.2 s ± 151.3 s). Interlocutors were recorded on separate audio channels using clip-on lapel microphones (Sony ECM-77B) placed ∼15 cm from the mouth, and recorded to DAT (16-bit amplitude resolution, 44.1-kHz sampling rate, uncompressed wav files, Sony DTC series recorder). For more description of the conversations, see ref. 32.

Colaughter Segments. Forty-eight colaughter segments were extracted from 24 conversations (two from each), half from conversations between established friends (mean length of acquaintance = 20.5 mo; range = 4–54 mo; mean age ± SD = 18.6 ± 0.6) and half from conversations between strangers who had just met (mean age ± SD = 19.3 ± 1.8). Colaughter was defined as the simultaneous vocal production (intensity onsets within 1 s), in two speakers, of a nonverbal, egressive, burst series (or single burst), either voiced (periodic) or unvoiced (aperiodic). Laughter is acoustically variable, but often stereotyped in form, characterized typically by an initial alerting component, a stable vowel configuration, and a decay function (2, 13, 14). In the colaughter segments selected for use, no individual laugh contained verbal content or other noises of any kind. To prevent a selection bias in stimulus creation, for all conversations, only two colaughter sequences were used: namely, the first to appear in the conversation and the last to appear in the conversation. If a colaughter sequence identified using this rule contained speech or other noises, the next qualifying occurrence was chosen. The length of colaughter segments (in milliseconds) between friends (mean length ± SD = 1,146 ± 455) and strangers (mean length ± SD = 1,067 ± 266) was similar, t(46) = 0.74, P = 0.47. Laughter onset asynchrony (in milliseconds) was also similar between friends (mean length ± SD = 337 ± 299) and strangers (mean length ± SD = 290 ± 209), t(46) = −0.64, P = 0.53. Previous studies have documented that the frequency of colaughter varies as a function of the gender composition of the dyad or group (6, 7). The same was true in the source conversations used here, with female friends producing colaughter at the highest frequency, followed by mixed-sex groups, and then all-male groups. Consequently, our stimulus set had uneven absolute numbers of different dyad types, reflecting the actual occurrence frequency in the sample population. Of the 24 sampled conversations, 10 pairs were female dyads, 8 pairs were mixed-sex dyads, and 6 pairs were male dyads. For each of these sex-pair combinations, half were friends and half were strangers. Sample audio files for each type of dyad and familiarity category are presented in Audios S7–S12; these recordings are depicted visually in spectrograms presented in Fig. 4.
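The two comparisons of segment length and onset asynchrony can be approximately checked from the summary statistics alone. The sketch below computes a pooled-variance (Student's) t statistic, assuming 24 segments per group (half of the 48 segments) and assuming that the reported 46 degrees of freedom reflect this kind of test. Because the published means and SDs are rounded, the result should be close to, but not necessarily identical with, the reported values; the sign depends only on the order of subtraction.

```python
import math
from typing import Tuple


def pooled_t(mean1: float, sd1: float, n1: int,
             mean2: float, sd2: float, n2: int) -> Tuple[float, int]:
    """Student's two-sample t statistic and degrees of freedom from summary stats."""
    df = n1 + n2 - 2
    pooled_variance = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_variance * (1.0 / n1 + 1.0 / n2))
    return (mean1 - mean2) / standard_error, df


# Segment length in ms: friends vs. strangers (values from the text).
t_length, df_length = pooled_t(1146, 455, 24, 1067, 266, 24)

# Onset asynchrony in ms: friends vs. strangers (values from the text).
t_async, df_async = pooled_t(337, 299, 24, 290, 209, 24)

print(f"length: t({df_length}) = {t_length:.2f}")
print(f"asynchrony: t({df_async}) = {t_async:.2f}")
```

Both statistics are small in absolute value, consistent with the statement that friends' and strangers' colaughter segments were similar in duration and onset timing.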

Design and Procedure. The selected 48 colaughter stimuli were amplitude-normalized and presented in random order using SuperLab 4.0 experiment software (www.superlab.com). We recruited 966 participants from 24 societies across six regions of the world (Movie S1); for full demographic information about participants, see SI Appendix, Tables S5 and S6. For those study sites in which a language other than English was used in conducting the experiment, the instructions were translated beforehand by the respective investigators or by native-speaker translators recruited by them for this purpose. Customized versions of the experiment were then created for each of these study sites using the translated instructions and a run-only version of the software. For those study sites in which literacy was limited or absent, the experimenter read the instructions aloud to each participant in turn.
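The two stimulus-preparation steps named above, amplitude normalization and random presentation order, can be illustrated with a minimal sketch. The text does not state which normalization method was used, so peak normalization is assumed here purely for illustration; in the study, presentation order was handled by SuperLab, not by code like this. The function names and the toy sample values are hypothetical.

```python
import random

def peak_normalize(samples, target_peak=1.0):
    """Scale samples so the largest absolute value equals target_peak.

    Assumption: peak normalization; the source only says "amplitude-normalized".
    """
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return list(samples)  # silent clip: nothing to scale
    return [s * target_peak / peak for s in samples]

def random_trial_order(n_stimuli, seed=None):
    """Return a random permutation of stimulus indices 0..n_stimuli-1."""
    rng = random.Random(seed)
    order = list(range(n_stimuli))
    rng.shuffle(order)
    return order

clip = [0.1, -0.25, 0.5, -0.05]          # toy waveform, not real data
normalized = peak_normalize(clip)
order = random_trial_order(48, seed=1)   # one order for the 48 stimuli
print(normalized)
print(order[:5])
```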

Before each experiment, participants were instructed that they would be listening to recordings of pairs of people laughing together in a conversation, and they would be asked questions about each recording. Participants received one practice trial and then completed the full experiment consisting of 48 trials. After each trial, listeners answered two questions. The first question was a two-alternative forced-choice asking them to identify the pair as either friends or strangers; the second question asked listeners to judge how much the pair liked one another on a scale of 1–7, with 1 being not at all, and 7 being very much. The scale was presented visually and, in study sites where the investigator judged participants’ experience with numbers or scales to be low, participants used their finger to point to the appropriate part of the scale. All participants wore headphones. For complete text of instructions and questions used in the experiment, see SI Appendix.
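The per-trial data described above (one forced-choice judgment and one 1–7 rating) could be represented as a small validated record. This is only a sketch of one possible representation; the class and field names are hypothetical and are not taken from the study's software or data files.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TrialResponse:
    """One listener's answers for one colaughter stimulus (hypothetical layout)."""
    stimulus_id: int       # index of the stimulus, 0..47
    judged_friends: bool   # True = "friends", False = "strangers"
    liking: int            # 1 = not at all ... 7 = very much

    def __post_init__(self):
        if not 0 <= self.stimulus_id < 48:
            raise ValueError("stimulus_id must be in 0..47")
        if not 1 <= self.liking <= 7:
            raise ValueError("liking must be on the 1-7 scale")

response = TrialResponse(stimulus_id=3, judged_friends=True, liking=6)
print(response)
```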

ACKNOWLEDGMENTS. We thank our participants around the globe, and Brian Kim and Andy Lin of the UCLA Statistical Consulting Group.

1. Dale R, Fusaroli R, Duran N, Richardson D (2013) The self-organization of human interaction. Psychol Learn Motiv 59:43–95.

2. Provine RR (2000) Laughter: A Scientific Investigation (Penguin, New York).

3. Gervais M, Wilson DS (2005) The evolution and functions of laughter and humor: A synthetic approach. Q Rev Biol 80(4):395–430.

4. Scott SK, Lavan N, Chen S, McGettigan C (2014) The social life of laughter. Trends Cogn Sci 18(12):618–620.

5. Sauter DA, Eisner F, Ekman P, Scott SK (2010) Cross-cultural recognition of basic emotions through nonverbal emotional vocalizations. Proc Natl Acad Sci USA 107(6):2408–2412.

6. Provine RR, Fischer KR (1989) Laughing, smiling, and talking: Relation to sleeping and social context in humans. Ethology 83(4):295–305.

7. Smoski MJ, Bachorowski JA (2003) Antiphonal laughter between friends and strangers. Cogn Emotion 17(2):327–340.

8. Vettin J, Todt D (2004) Laughter in conversation: Features of occurrence and acoustic structure. J Nonverbal Behav 28(2):93–115.

9. Grammer K, Eibl-Eibesfeldt I (1990) The ritualisation of laughter. Natürlichkeit der Sprache und der Kultur, ed Koch W (Brockmeyer, Bochum, Germany), pp 192–214.

10. Grammer K (1990) Strangers meet: Laughter and nonverbal signs of interest in opposite-sex encounters. J Nonverbal Behav 14(4):209–236.

11. Provine RR (1992) Contagious laughter: Laughter is a sufficient stimulus for laughs and smiles. Bull Psychon Soc 30(1):1–4.

12. Luschei ES, Ramig LO, Finnegan EM, Baker KK, Smith ME (2006) Patterns of laryngeal electromyography and the activity of the respiratory system during spontaneous laughter. J Neurophysiol 96(1):442–450.

13. Titze IR, Finnegan EM, Laukkanen AM, Fuja M, Hoffman H (2008) Laryngeal muscle activity in giggle: A damped oscillation model. J Voice 22(6):644–648.

14. Bachorowski JA, Smoski MJ, Owren MJ (2001) The acoustic features of human laughter. J Acoust Soc Am 110(3 Pt 1):1581–1597.

15. Provine RR (1993) Laughter punctuates speech: Linguistic, social and gender contexts of laughter. Ethology 95(4):291–298.

16. Davila Ross M, Owren MJ, Zimmermann E (2009) Reconstructing the evolution of laughter in great apes and humans. Curr Biol 19(13):1106–1111.

17. van Hooff JA (1972) A comparative approach to the phylogeny of laughter and smiling. Nonverbal Communication, ed Hinde RA (Cambridge Univ Press, Cambridge, England), pp 209–241.

18. Bachorowski JA, Owren MJ (2001) Not all laughs are alike: Voiced but not unvoiced laughter readily elicits positive affect. Psychol Sci 12(3):252–257.

19. Kipper S, Todt D (2001) Variation of sound parameters affects the evaluation of human laughter. Behaviour 138(9):1161–1178.

20. Bryant GA, Aktipis CA (2014) The animal nature of spontaneous human laughter. Evol Hum Behav 35(4):327–335.

21. McGettigan C, et al. (2015) Individual differences in laughter perception reveal roles for mentalizing and sensorimotor systems in the evaluation of emotional authenticity. Cereb Cortex 25(1):246–257.

22. Szameitat DP, et al. (2010) It is not always tickling: Distinct cerebral responses during perception of different laughter types. Neuroimage 53(4):1264–1271.

23. Jürgens U (2002) Neural pathways underlying vocal control. Neurosci Biobehav Rev 26(2):235–258.

24. Ackermann H, Hage SR, Ziegler W (2014) Brain mechanisms of acoustic communication in humans and nonhuman primates: An evolutionary perspective. Behav Brain Sci 37(6):529–546.

25. Ambady N, Bernieri FJ, Richeson JA (2000) Toward a histology of social behavior: Judgmental accuracy from thin slices of the behavioral stream. Adv Exp Soc Psychol 32:201–271.

26. Henrich J, Heine SJ, Norenzayan A (2010) The weirdest people in the world? Behav Brain Sci 33(2-3):61–83, discussion 83–135.

27. Bates D, Maechler M, Bolker B, Walker S (2014) lme4: Linear mixed-effects models using Eigen and S4. R package version 1.1-7. Available at https://cran.r-project.org/web/packages/lme4/index.html. Accessed September 9, 2014.

28. R Core Team (2014) R: A Language and Environment for Statistical Computing (R Foundation for Statistical Computing, Vienna, Austria).

29. Zou H, Hastie T (2005) Regularization and variable selection via the elastic net. J R Stat Soc, B 67(2):301–320.

30. Williams CE, Stevens KN (1972) Emotions and speech: Some acoustical correlates. J Acoust Soc Am 52(4, 4B):1238–1250.

31. Silk JB (2007) Social components of fitness in primate groups. Science 317(5843):1347–1351.

32. Bryant GA (2010) Prosodic contrasts in ironic speech. Discourse Process 47(7):545–566.

Bryant et al. PNAS | April 26, 2016 | vol. 113 | no. 17 | 4687
